This article was authored by Neil Savage and originally published to Nature

Bing Liu was road-testing a self-driving car when suddenly something went wrong. The vehicle had been operating smoothly until it reached a T-junction and refused to move. Liu and the car’s other occupants were baffled. The road they were on was deserted, with no pedestrians or other cars in sight. “We looked around, we noticed nothing in the front, or in the back. I mean, there was nothing,” says Liu, a computer engineer at the University of Illinois Chicago.

Stumped, the engineers took over control of the vehicle and drove back to the laboratory to review the trip. They worked out that the car had been stopped by a pebble in the road. It wasn’t something a person would even notice, but when it showed up on the car’s sensors it registered as an unknown object — something the artificial intelligence (AI) system driving the car had not encountered before.

Part of Nature Outlook: Robotics and artificial intelligence

The problem wasn’t with the AI algorithm as such — it performed as intended, stopping short of the unknown object to be on the safe side. The issue was that once the AI had finished its training, using simulations to develop a model that told it the differences between a clear road and an obstacle, it could learn nothing more. When it encountered something that had not been part of its training data, such as the pebble or even a dark spot on the road, the AI did not know how to react. People can build on what they’ve learnt and adapt as their environment changes; most AI systems are locked into what they already know.

In the real world, of course, unexpected situations inevitably arise. Therefore, Liu argues that any system aiming to perform learnt tasks outside a lab needs to be capable of on-the-job learning — supplementing the model it’s already developed with new data that it encounters. The car could, for instance, detect another car driving through a dark patch on the road with no problem, and decide to imitate it, learning in the process that a wet bit of road was not a problem. In the case of the pebble, it could use a voice interface to ask the car’s occupant what to do. If the rider said it was safe to continue, it could drive on, and it could then call on that answer for its next pebble encounter. “If the system can continually learn, this problem is easily solved,” Liu says.

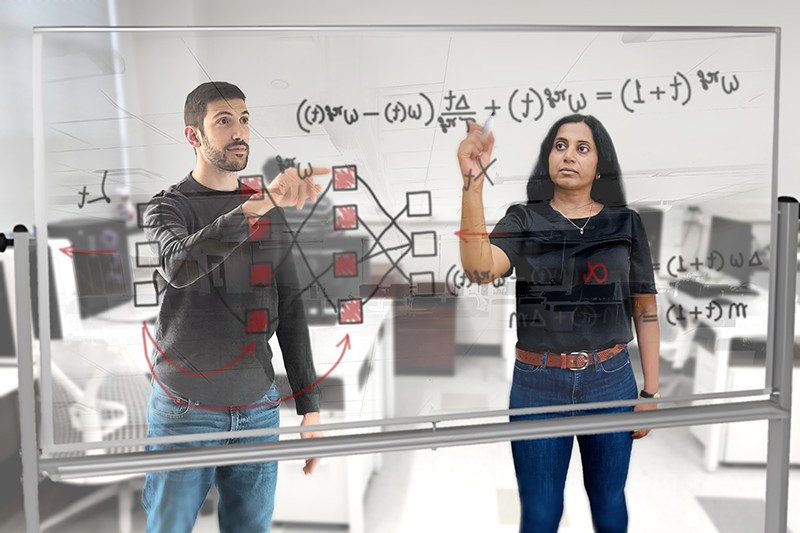

Such continual learning, also known as lifelong learning, is the next step in the evolution of AI. Much AI relies on neural networks, which take data and pass them through a series of computational units, known as artificial neurons, which perform small mathematical functions on the data. Eventually the network develops a statistical model of the data that it can then match to new inputs. Researchers, who have based these neural networks on the operation of the human brain, are looking to humans again for inspiration on how to make AI systems that can keep learning as they encounter new information. Some groups are trying to make computer neurons more complex so they’re more like neurons in living organisms. Others are imitating the growth of new neurons in humans so machines can react to fresh experiences. And some are simulating dream states to overcome a problem of forgetfulness. Lifelong learning is necessary not only for self-driving cars, but for any intelligent system that has to deal with surprises, such as chatbots, which are expected to answer questions about a product or service, and robots that can roam freely and interact with humans. “Pretty much any instance where you deploy AI in the future, you would see the need for lifelong learning,” says Dhireesha Kudithipudi, a computer scientist who directs the MATRIX AI Consortium for Human Well-Being at the University of Texas at San Antonio.

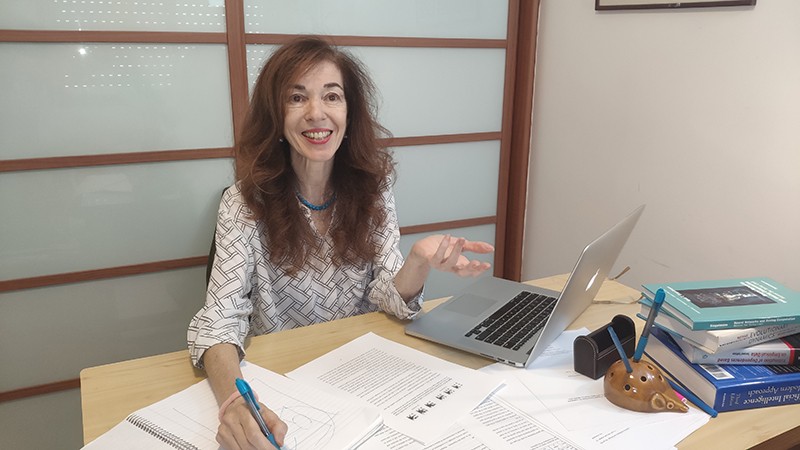

Continual learning will be necessary if AI is to truly live up to its name. “AI, to date, is really not intelligent,” says Hava Siegelmann, a computer scientist at the University of Massachusetts Amherst who created the Lifelong Learning Machines research-funding initiative for the US Defense Advanced Research Projects Agency. “If it’s a neural network, you train it in advance, you give it a data set and that’s all. It does not have the ability to improve with time.”

Model making

In the past decade, computers have become adept at tasks such as classifying cats or tumours in images, identifying sentiment in written language, and winning at chess. Researchers might, for instance, feed the computer photos that have been labelled by humans as containing cats. The computer receives the photos, which it interprets as numerical descriptions of pixels with various colour and brightness values, and runs them through layers of artificial neurons. Each neuron has a randomly chosen weight, a value by which it multiplies the value of the input data. The computer runs the input data through the layers of neurons and checks the output data against validation data to see how accurate the results are. It then repeats the process, altering the weights in each iteration until the output reaches a high accuracy. The process produces a statistical model of the values and the placement of pixels that define a cat. The network can then analyse a new photo and decide whether it matches the model — that is, whether there’s a cat in the picture. But that cat model, once developed, is pretty much set in stone.
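The training loop described above (start from random weights, run the data through, compare the output with the labels, adjust and repeat) can be sketched with a single artificial neuron. This is only a minimal illustration under stated assumptions, not the image classifiers discussed in the article: the two-value "pixel" samples are toy data, and the names `predict` and `train_neuron` are hypothetical.

```python
import math
import random


def predict(weights, bias, features):
    """Weighted sum of the inputs, squashed to a score between 0 and 1."""
    total = bias + sum(w * x for w, x in zip(weights, features))
    return 1.0 / (1.0 + math.exp(-total))


def train_neuron(samples, labels, epochs=2000, lr=0.5, seed=0):
    """Start from random weights and nudge them after every example."""
    rng = random.Random(seed)
    weights = [rng.uniform(-0.5, 0.5) for _ in samples[0]]
    bias = 0.0
    for _ in range(epochs):
        for features, label in zip(samples, labels):
            error = predict(weights, bias, features) - label
            # Gradient step for the logistic loss: move against the error.
            weights = [w - lr * error * x for w, x in zip(weights, features)]
            bias -= lr * error
    return weights, bias


# Toy stand-in for labelled photos: two "pixel" values per sample,
# labelled 1 when the first pixel is brighter than the second.
samples = [[0.9, 0.1], [0.8, 0.3], [0.2, 0.7], [0.1, 0.9]]
labels = [1, 1, 0, 0]

weights, bias = train_neuron(samples, labels)
accuracy = sum(
    (predict(weights, bias, features) > 0.5) == bool(label)
    for features, label in zip(samples, labels)
) / len(samples)
print(f"training accuracy: {accuracy:.2f}")
```

Once `train_neuron` returns, the weights stop changing, which is the "set in stone" property the paragraph describes.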

One way to get the computer to learn to identify many objects would be to develop lots of models. You could train one neural network to recognize cats and another to recognize dogs. That would require two data sets, one for each animal, and would double the time and computing power needed compared with developing a single model. But suppose you wanted the computer to distinguish between pictures of cats and dogs. You would have to train a third network, either using all the original data or comparing the two existing models. Add other animals into the mix and yet more models must be developed.

Training and storing more models requires greater resources, and this can quickly become a problem. Training a neural network can take reams of data and weeks of time. For instance, an AI system called GPT-3, which learnt to produce text that sounds as if it was written by a human, required almost 15 days of training on 10,000 high-end computer processors [1]. The ImageNet data set, which is often used to train neural networks in object recognition, contains more than 14 million images. Depending on the subset of the total number of images that is used, it can take from a few minutes to more than a day and a half to download. Any machine that has to spend days re-learning a task each time it encounters new information will essentially grind to a halt.

One system that could make the generation of multiple models more efficient is Self-Net [2], created by Rolando Estrada, a computer scientist at Georgia State University in Atlanta, and his students Jaya Mandivarapu and Blake Camp. Self-Net compresses the models, to prevent a system with a lot of different animal models from growing too unwieldy.

The system uses an autoencoder, a separate neural network that learns which parameters — such as clusters of pixels in the case of image-recognition tasks — the original neural network focused on when building its model. One layer of neurons in the middle of the autoencoder forces the machine to pick a tiny subset of the most important weights of the model. There might be 10,000 numerical values going into the model and another 10,000 coming out, but in the middle layer the autoencoder reduces that to just 10 numbers. So the system has to find the ten weights that will allow it to get the most accurate output, Estrada says.

The process is similar to compressing a large TIFF image file down to a smaller JPEG, he says; there’s a small loss of fidelity, but what is left is good enough. The system tosses out most of the original input data, and then saves the ten best weights. It can then use those to perform the same cat-identification task with almost the same accuracy, without having to store enormous amounts of data.
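In symbols, a bottleneck autoencoder of the kind described here can be written roughly as follows. This is the generic textbook formulation, assumed for illustration rather than taken from the Self-Net paper; the symbols $\theta$ (the original model's 10,000 weights), $z$ (the ten-number code), $f_{\text{enc}}$ and $g_{\text{dec}}$ are all assumptions.

```latex
\begin{aligned}
z &= f_{\text{enc}}(\theta), & \theta \in \mathbb{R}^{10000},\; z \in \mathbb{R}^{10} \\
\hat{\theta} &= g_{\text{dec}}(z), & \hat{\theta} \in \mathbb{R}^{10000} \\
\mathcal{L} &= \lVert \theta - \hat{\theta} \rVert_2^2
\end{aligned}
```

Training minimizes the reconstruction loss $\mathcal{L}$, so only $z$ and the decoder need to be stored. In a standard autoencoder the ten numbers are a learned code rather than ten of the original values, which is where the small loss of fidelity comes from.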

To streamline the creation of models, computer scientists often use pre-training. Models that are trained to perform similar tasks have to learn similar parameters, at least in the early stages. Any neural network learning to recognize objects in images, for instance, first needs to learn to identify diagonal and vertical lines. There’s no need to start from scratch each time, so newer models can be pre-trained with the weights that already recognize those basic features. To make models that can recognize cows or pigs or kangaroos, Estrada can pre-train other neural networks with the parameters from his autoencoder. Because all animals share some of the same facial features, even if the details of size or shape are different, such pre-training allows new models to be generated more efficiently.

The system is not a perfect way to get networks to learn on the job, Estrada says. A human still has to tell the machine when to switch tasks; for example, when to start looking for horses instead of cows. That requires a human to stay in the loop, and it might not always be obvious to a person that it’s time for the machine to do something different. But Estrada hopes to find a way to automate task switching so the computer can learn to identify characteristics of the input data and use that to decide which model it should use, so it can keep operating without interruption.

Out with the old

It might seem that the obvious course is not to make multiple models but rather to grow a network. Instead of developing two networks for recognizing cats and horses respectively, for instance, it might appear easier to teach the cat-savvy network to also recognize horses. This approach, however, forces AI designers to confront one of the main issues in lifelong learning, a phenomenon known as catastrophic forgetting. A network trained to recognize cats will develop a set of weights across its artificial neurons that are specific to that task. If it is then asked to start identifying horses, it will start readjusting the weights to make it more accurate for horses. The model will no longer contain the right weights for cats, causing it to essentially forget what a cat looks like. “The memory is in the weights. When you train it with new information, you write on the same weights,” says Siegelmann. “You can have a billion examples of a car driving, and now you teach it 200 examples related to some accident that you don’t want to happen, and it may know these 200 cases and forget the billion.”
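Catastrophic forgetting can be reproduced in a model with just two weights. The sketch below is a toy under stated assumptions, not any of the networks discussed in the article: the "cat" and "horse" tasks are single hypothetical examples that happen to share the first weight, and `sgd_step` is a plain squared-error gradient step.

```python
def predict(weights, features):
    """Output of a linear model: the weighted sum of its inputs."""
    return sum(w * x for w, x in zip(weights, features))


def sgd_step(weights, features, target, lr=0.1):
    """One gradient step on squared error for the linear model."""
    error = predict(weights, features) - target
    return [w - lr * error * x for w, x in zip(weights, features)]


# Hypothetical toy tasks that both rely on the first weight.
task_a = ([1.0, 0.0], 1.0)  # "cat" example: the model should output 1
task_b = ([1.0, 1.0], 0.0)  # "horse" example: the model should output 0

weights = [0.0, 0.0]
for _ in range(200):
    weights = sgd_step(weights, *task_a)
score_a_before = predict(weights, task_a[0])

# Sequential training on task B alone writes over the shared weight.
for _ in range(200):
    weights = sgd_step(weights, *task_b)
score_a_after = predict(weights, task_a[0])

print(f"task A output before learning B: {score_a_before:.2f}")
print(f"task A output after learning B:  {score_a_after:.2f}")
```

A set of weights that satisfies both tasks exists, `[1.0, -1.0]`, but training on task B alone never finds it, because nothing in the update reminds the model of task A.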

One method of overcoming catastrophic forgetting uses replay — that is, taking data from a previously learnt task and interweaving them with new training data. This approach, however, runs head-on into the resource problem. “Replay mechanisms are very memory hungry and computationally hungry, so we do not have models that can solve these problems in a resource-efficient way,” Kudithipudi says. There might also be reasons not to store data, such as concerns about privacy or security, or because they belong to someone unwilling to share them indefinitely.
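A minimal sketch of plain replay, assuming the simplest possible setting: a two-weight linear model, one stored example from the old task and one example from the new task. The function names and the toy data are hypothetical, and this is the stored-data form of replay rather than any brain-inspired variant.

```python
def predict(weights, features):
    """Output of a linear model: the weighted sum of its inputs."""
    return sum(w * x for w, x in zip(weights, features))


def sgd_step(weights, features, target, lr=0.1):
    """One gradient step on squared error for the linear model."""
    error = predict(weights, features) - target
    return [w - lr * error * x for w, x in zip(weights, features)]


def train_with_replay(weights, new_examples, replay_buffer, epochs=500):
    """Interleave stored old-task examples with the new-task examples."""
    for _ in range(epochs):
        for features, target in new_examples + replay_buffer:
            weights = sgd_step(weights, features, target)
    return weights


old_task = [([1.0, 0.0], 1.0)]  # kept in memory from the earlier task
new_task = [([1.0, 1.0], 0.0)]

weights = [1.0, 0.0]  # assumed result of training on the old task alone
weights = train_with_replay(weights, new_task, old_task)

print(f"old task output: {predict(weights, old_task[0][0]):.3f}")
print(f"new task output: {predict(weights, new_task[0][0]):.3f}")
```

The cost is visible even at this scale: `replay_buffer` has to be kept around, and every epoch processes the old examples as well as the new ones.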

Siegelmann says replay is roughly analogous to what the human brain does when it dreams. Many neuroscientists think that the brain consolidates memories and learns things by replaying experiences during sleep. Similarly, replay in neural networks can reinforce weights that might otherwise be overwritten. But the brain doesn’t actually review a moment-by-moment rerun of its experiences, Siegelmann says. Rather, it reduces those experiences to a handful of characteristic features and patterns — a process known as abstraction — and replays just those parts. Her brain-inspired replay tries to do something similar; instead of reviewing mountains of stored data, it selects certain facets of what it has learnt to replay. Each layer in a neural network, Siegelmann says, moves the learning to a higher level of abstraction, from the specific input data in the bottom layer to mathematical relationships in the data at higher layers. In this way, the system sorts specific examples of objects into classes. She lets the network select the most important of the abstractions in the top couple of layers and replay those. This technique keeps the learnt weights reasonably stable — although not perfectly so — without having to store any previously used data at all.

Because such brain-inspired replay focuses on the most salient points that the network has learnt, the network can find associations between new and old data more easily. The method also helps the network to distinguish between pieces of data that it might not have separated easily before — finding the differences between a pair of identical twins, for example. If you’re down to only a handful of parameters in each set, instead of millions, it’s easier to spot the similarities. “Now, when we replay one with the other, we start looking at the differences,” Siegelmann says. “It forces you to find the separation, the contrast, the associations.”

Focusing on high-level abstractions rather than specifics is useful for continual learning because it allows the computer to make comparisons and draw analogies between different scenarios. For example, if your self-driving car has to work out how to handle driving on ice in Massachusetts, Siegelmann says, it might use data that it has about driving on ice in Michigan. Those examples won’t exactly match the new conditions, because they’re from different roads. But the car also has knowledge about driving on snow in Massachusetts, where it is familiar with the roads. So if the car can identify only the most important differences and similarities between snow and ice, Massachusetts and Michigan, instead of getting bogged down in minor details, it might come up with a solution to the specific, new situation of driving on ice in Massachusetts.

A modular approach

Looking at how the brain handles these issues can inspire ideas, even if they don’t replicate what’s going on biologically. To deal with the need for a neural network that can learn tasks without overwriting the old, scientists take a cue from neurogenesis — the process by which neurons are formed in the brain. A machine can’t grow parts the way a body can, but computer scientists can replicate new neurons in software by generating connections in parts of the system. Although the mature neurons have learnt to react to only certain data inputs, these ‘baby neurons’ can respond to all the input. “They can react to new samples that are fed into the model,” Kudithipudi says. In other words, they can learn from new information while the already-trained neurons retain what they’ve learnt.

Adding more neurons is just one way to enable a system to learn new things. Estrada has come up with another approach, on the basis of the fact that a neural network is only a loose approximation of a human brain. “We call the nodes in a neural network ‘neurons’. But if you see what they’re actually doing, they’re basically computing a weighted sum. It’s an incredibly simplified view of real, biological neurons, which perform all sorts of complex nonlinear signal processing.”

In an effort to mimic some of the complicated behaviours of real neurons more successfully, Estrada and his students developed what he calls deep artificial neurons (DANs) [3]. A DAN is a small neural network that is treated as a single neuron in a larger neural network.

DANs can be trained for one particular task — for instance, Estrada might develop one for identifying handwritten numbers. The model in the DAN is then fixed, so it can’t be changed and will always provide the same output to other neurons in the still-trainable network layers surrounding it. That larger network can go on to learn a related task, such as identifying numbers written by someone else — but the original model is not forgotten. “You end up with this general-purpose module that you can reuse for similar tasks in the future,” Estrada says. “These modules allow the system to learn to perform the new tasks in a similar way to the old tasks, so that the features are more compatible with each other over time. So that means that the features are more stable and it forgets less.”
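The general idea of a fixed module inside a still-trainable network can be sketched as below. This is not the DAN architecture from the paper, only an illustration of freezing a small sub-network while fitting the weights around it; the module's two hidden units, its constants and the function names are all hypothetical.

```python
import math


def frozen_module(x):
    """Stand-in for a pre-trained sub-network whose weights are fixed.

    Nothing in this function is updated during the later training.
    """
    hidden = [math.tanh(1.5 * x - 0.5), math.tanh(-0.8 * x + 0.3)]
    return 0.7 * hidden[0] - 0.4 * hidden[1]


def train_outer(samples, targets, epochs=500, lr=0.05):
    """Fit only the outer weight and bias that wrap the frozen module."""
    weight, bias = 0.0, 0.0
    for _ in range(epochs):
        for x, y in zip(samples, targets):
            feature = frozen_module(x)  # fixed feature, never adjusted
            error = weight * feature + bias - y
            weight -= lr * error * feature
            bias -= lr * error
    return weight, bias


# Toy "related task": targets are a rescaled version of the module's output.
samples = [-1.0, -0.5, 0.0, 0.5, 1.0]
targets = [2.0 * frozen_module(x) + 1.0 for x in samples]

weight, bias = train_outer(samples, targets)
print(f"outer weight: {weight:.3f}, outer bias: {bias:.3f}")
```

Because `frozen_module` returns the same output for the same input before and after training, whatever it encoded for the first task cannot be overwritten by the second.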

So far, Estrada and his colleagues have shown that this technique works on fairly simple tasks, such as number recognition. But they’re trying to adapt it to more challenging problems, including learning how to play old video games such as Space Invaders. “And then, if that’s successful, we could use it for more sophisticated things,” says Estrada. It might, for instance, prove useful in autonomous drones, which are sent out with basic programming but have to adapt to new data in the environment, and will have to do any on-the-fly learning within tight power and processing constraints.

There’s a long way to go before AI can function as people do, dealing with an endless variety of ever-changing scenarios. But if computer scientists can develop the techniques to allow machines to make the continual adaptations that living creatures are capable of, it could go a long way towards making AI systems more versatile, more accurate and more recognizably intelligent.

doi: https://doi.org/10.1038/d41586-022-01962-y

This article is part of Nature Outlook: Robotics and artificial intelligence, an editorially independent supplement produced with the financial support of third parties.

References

1. Patterson, D. et al. Preprint at https://arxiv.org/abs/2104.10350 (2021).

2. Mandivarapu, J. K., Camp, B. & Estrada, R. Front. Artif. Intell. 3, 19 (2020).

3. Camp, B., Mandivarapu, J. K. & Estrada, R. Preprint at https://arxiv.org/abs/2011.07035 (2020).

This article was authored by Marcus Woo and originally published to Nature

Fork in hand, a robot arm skewers a strawberry from above and delivers it to Tyler Schrenk’s mouth. Sitting in his wheelchair, Schrenk nudges his neck forward to take a bite. Next, the arm goes for a slice of banana, then a carrot. Each motion it performs by itself, on Schrenk’s spoken command.

For Schrenk, who became paralysed from the neck down after a diving accident in 2012, such a device would make a huge difference in his daily life if it were in his home. “Getting used to someone else feeding me was one of the strangest things I had to transition to,” he says. “It would definitely help with my well-being and my mental health.”

His home is already fitted with voice-activated power switches and door openers, enabling him to be independent for about 10 hours a day without a caregiver. “I’ve been able to figure most of this out,” he says. “But feeding on my own is not something I can do.” Which is why he wanted to test the feeding robot, dubbed ADA (short for assistive dexterous arm). Cameras located above the fork enable ADA to see what to pick up. But knowing how forcefully to stick a fork into a soft banana or a crunchy carrot, and how tightly to grip the utensil, requires a sense that humans take for granted: “Touch is key,” says Tapomayukh Bhattacharjee, a roboticist at Cornell University in Ithaca, New York, who led the design of ADA while at the University of Washington in Seattle. The robot’s two fingers are equipped with sensors that measure the sideways (or shear) force when holding the fork [1]. The system is just one example of a growing effort to endow robots with a sense of touch.

“The really important things involve manipulation, involve the robot reaching out and changing something about the world,” says Ted Adelson, a computer-vision specialist at the Massachusetts Institute of Technology (MIT) in Cambridge. Only with tactile feedback can a robot adjust its grip to handle objects of different sizes, shapes and textures. With touch, robots can help people with limited mobility, pick up soft objects such as fruit, handle hazardous materials and even assist in surgery. Tactile sensing also has the potential to improve prosthetics, help people to literally stay in touch from afar, and even has a part to play in fulfilling the fantasy of the all-purpose household robot that will take care of the laundry and dishes. “If we want robots in our home to help us out, then we’d want them to be able to use their hands,” Adelson says. “And if you’re using your hands, you really need a sense of touch.”

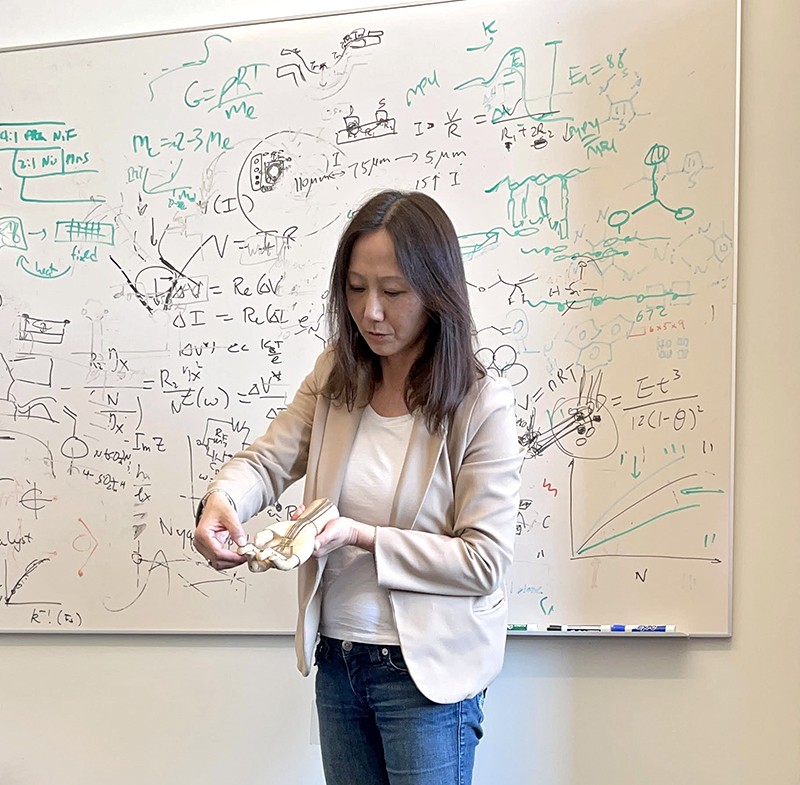

With this goal in mind, and buoyed by advances in machine learning, researchers around the world are developing myriad tactile sensors, from finger-shaped devices to electronic skins. The idea isn’t new, says Veronica Santos, a roboticist at the University of California, Los Angeles. But advances in hardware, computational power and algorithmic knowhow have energized the field. “There is a new sense of excitement about tactile sensing and how to integrate it with robots,” Santos says.

Feel by sight

One of the most promising sensors relies on well-established technology: cameras. Today’s cameras are inexpensive yet powerful, and combined with sophisticated computer vision algorithms, they’ve led to a variety of tactile sensors. Different designs use slightly different techniques, but they all interpret touch by visually capturing how a material deforms on contact.

ADA uses a popular camera-based sensor called GelSight, the first prototype of which was designed by Adelson and his team more than a decade ago [2]. A light and a camera sit behind a piece of soft rubbery material, which deforms when something presses against it. The camera then captures the deformation with super-human sensitivity, discerning bumps as small as one micrometre. GelSight can also estimate forces, including shear forces, by tracking the motion of a pattern of dots printed on the rubbery material as it deforms [2].
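The dot-tracking idea can be illustrated with a rough sketch: compare where the printed dots sit before and after contact, and treat their average sideways shift as a proxy for shear. This is not GelSight's actual algorithm. The function names, the dot coordinates and the `stiffness` calibration constant are all hypothetical, and the sketch assumes the dots have already been located and matched between the two camera frames.

```python
def mean_displacement(before, after):
    """Average (dx, dy) shift of matched dots between two frames, in pixels."""
    n = len(before)
    dx = sum(a[0] - b[0] for b, a in zip(before, after)) / n
    dy = sum(a[1] - b[1] for b, a in zip(before, after)) / n
    return dx, dy


def estimate_shear(before, after, stiffness=0.05):
    """Map mean dot motion to a shear estimate.

    `stiffness` (force units per pixel) is a made-up calibration constant;
    a real sensor would need a measured, probably nonlinear, mapping.
    """
    dx, dy = mean_displacement(before, after)
    return stiffness * dx, stiffness * dy


# Hypothetical dot positions at rest and with an object dragging sideways.
rest = [(10, 10), (20, 10), (10, 20), (20, 20)]
pressed = [(12, 11), (22, 11), (12, 21), (22, 21)]

shear_x, shear_y = estimate_shear(rest, pressed)
print(f"estimated shear: ({shear_x:.3f}, {shear_y:.3f})")
```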

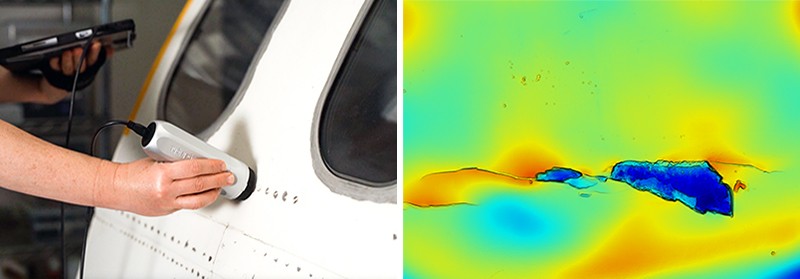

GelSight is not the first or the only camera-based sensor (ADA was tested with another one, called FingerVision). However, its relatively simple and easy-to-manufacture design has so far set it apart, says Roberto Calandra, a research scientist at Meta AI (formerly Facebook AI) in Menlo Park, California, who has collaborated with Adelson. In 2011, Adelson co-founded a company, also called GelSight, based on the technology he had developed. The firm, which is based in Waltham, Massachusetts, has focused its efforts on industries such as aerospace, using the sensor technology to inspect for cracks and defects on surfaces.

One of the latest camera-based sensors is called Insight, documented this year by Huanbo Sun, Katherine Kuchenbecker and Georg Martius at the Max Planck Institute for Intelligent Systems in Stuttgart, Germany [3]. The finger-like device consists of a soft, opaque, tent-like dome held up with thin struts, hiding a camera inside.

It’s not as sensitive as GelSight, but it offers other advantages. GelSight is limited to sensing contact on a small, flat patch, whereas Insight detects touch all around its finger in 3D, Kuchenbecker says. Insight’s silicone surface is also easier to fabricate, and it determines forces more precisely. Kuchenbecker says that Insight’s bumpy interior surface makes forces easier to see, and unlike GelSight’s method of first determining the geometry of the deformed rubber surface and then calculating the forces involved, Insight determines forces directly from how light hits its camera. Kuchenbecker thinks this makes Insight a better option for a robot that needs to grab and manipulate objects; Insight was designed to form the tips of a three-digit robot gripper called TriFinger.

Skin solutions

Camera-based sensors are not perfect. For example, they cannot sense invisible forces, such as the magnitude of tension of a taut rope or wire. A camera’s frame-rate might also not be quick enough to capture fleeting sensations, such as a slipping grip, Santos says. And squeezing a relatively bulky camera-based sensor into a robot finger or hand, which might already be crowded with other sensors or actuators (the components that allow the hand to move) can also pose a challenge.

This is one reason other researchers are designing flat and flexible devices that can wrap around a robot appendage. Zhenan Bao, a chemical engineer at Stanford University in California, is designing skins that incorporate flexible electronics and replicate the body’s ability to sense touch. In 2018, for example, her group created a skin that detects the direction of shear forces by mimicking the bumpy structure of a below-surface layer of human skin called the spinosum [4].

When a gentle touch presses the outer layer of human skin against the dome-like bumps of the spinosum, receptors in the bumps feel the pressure. A firmer touch activates deeper-lying receptors found below the bumps, distinguishing a hard touch from a soft one. And a sideways force is felt as pressure pushing on the side of the bumps.

Bao’s electronic skin similarly features a bumpy structure that senses the intensity and direction of forces. Each one-millimetre bump is covered with 25 capacitors, which store electrical energy and act as individual sensors. When the layers are pressed together, the amount of stored energy changes. Because the sensors are so small, Bao says, a patch of electronic skin can pack in a lot of them, enabling the skin to sense forces accurately and aiding a robot to perform complex manipulations of an object.
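Why pressing changes the stored energy can be seen from the idealized parallel-plate capacitor. This is a textbook approximation, assumed here for illustration; the actual geometry of the capacitors in Bao's skin is not described in the article, and the symbols $A$ (plate area), $d$ (plate separation), $\varepsilon_r$ (relative permittivity of the layer between the plates) and $V$ (applied voltage) are assumptions.

```latex
C = \frac{\varepsilon_0 \varepsilon_r A}{d},
\qquad
E = \tfrac{1}{2} C V^{2}
```

Squeezing the layers together reduces $d$, which raises the capacitance $C$ and, at a fixed voltage, the stored energy $E$. Reading out many small capacitors on each bump then gives a map of where, and in which direction, the skin is being pushed.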

To test the skin, the researchers attached a patch to the fingertip of a rubber glove worn by a robot hand. The hand could pat the top of a raspberry and pick up a ping-pong ball without crushing either.

Although other electronic skins might not be as sensor-dense, they tend to be easier to fabricate. In 2020, Benjamin Tee, a former student of Bao who now leads his own laboratory at the National University of Singapore, developed a sponge-like polymer that can sense shear forces [5]. Moreover, similar to human skin, it is self-healing: after being torn or cut, it fuses back together when heated and stays stretchy, which is useful for dealing with wear and tear.

The material, dubbed AiFoam, is embedded with flexible copper wire electrodes, roughly emulating how nerves are distributed in human skin. When touched, the foam deforms and the electrodes squeeze together, which changes the electrical current travelling through it. This allows both the strength and direction of forces to be measured. AiFoam can even sense a person’s presence just before they make contact — when their finger comes within a few centimetres, it lowers the electric field between the foam’s electrodes.

Last November, researchers at Meta AI and Carnegie Mellon University in Pittsburgh, Pennsylvania, announced a touch-sensitive skin comprising a rubbery material embedded with magnetic particles [6]. When the skin, dubbed ReSkin, deforms, the particles move along with it, changing the magnetic field. It is designed to be easily replaced (it can be peeled off and a fresh skin installed without requiring complex recalibration), and 100 sensors can be produced for less than US$6.

Rather than being universal tools, different skins and sensors will probably lend themselves to particular purposes. Bhattacharjee and his colleagues, for example, have created a stretchable sleeve that fits over a robot arm and is useful for sensing incidental contact between a robotic arm and its environment [7]. The sleeve is made from layered fabric that detects changes in electrical resistance when pressure is applied to it. It can’t detect shear forces, but it can cover a broad area and wrap around a robot’s joints.

Bhattacharjee is using the sleeve to identify not just when a robotic arm comes into contact with something as it moves through a cluttered environment, but also what it bumps up against. If a helper robot in a home brushed against a curtain while reaching for an object, it might be fine for it to continue, but contact with a fragile wine glass would require evasive action.

Other approaches use air to provide a sense of touch. Some robots use suction grippers to pick up and move objects in warehouses or in the oceans. In these cases, Hannah Stuart, a mechanical engineer at the University of California, Berkeley, is hoping that measuring suction airflow can provide tactile feedback to a robot. Her group has shown that the rate of airflow can determine the strength of the suction gripper’s hold and even the roughness of the surface it is suckered on to [8]. And underwater, it can reveal how an object moves while being held by a suction-aided robot hand [9].

Processing feelings

Today’s tactile technologies are diverse, Kuchenbecker says. “There are multiple feasible options, and people can build on the work of others,” she says. But designing and building sensors is only the start. Researchers then have to integrate them into a robot, which must then work out how to use a sensor’s information to execute a task. “That’s actually going to be the hardest part,” Adelson says.

For electronic skins that contain a multitude of sensors, processing and analysing data from them all would be computationally and energy intensive. To handle so many data, researchers such as Bao are taking inspiration from the human nervous system, which processes a constant flood of signals with ease. Computer scientists have been trying to mimic the nervous system with neuromorphic computers for more than 30 years. But Bao’s goal is to combine a neuromorphic approach with a flexible skin that could integrate with the body seamlessly — for example, on a bionic arm.

Unlike in other tactile sensors, Bao’s skins deliver sensory signals as electrical pulses, such as those in biological nerves. Information is stored not in the intensity of the pulses, which can wane as a signal travels, but instead in their frequency. As a result, the signal won’t lose much information as the range increases, she explains.
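The advantage of putting the information in pulse frequency rather than pulse height can be shown with a small simulation. This is a sketch under simplifying assumptions, not a model of Bao's circuitry: `encode`, `transmit` and `decode` are hypothetical names, the pulses are evenly spaced, and the wire is assumed to weaken every pulse by the same factor without dropping any.

```python
def encode(pressure, duration=1.0, max_rate=100.0):
    """Turn a 0..1 pressure reading into evenly spaced pulse times."""
    count = int(round(pressure * max_rate * duration))
    return [(i + 0.5) * duration / count for i in range(count)]


def transmit(pulse_times, gain):
    """Model a lossy wire: every pulse arrives weaker, but on time."""
    return [(t, 1.0 * gain) for t in pulse_times]


def decode(pulses, duration=1.0, max_rate=100.0, threshold=0.1):
    """Recover pressure from how many pulses arrive, ignoring their height."""
    detected = sum(1 for _, amplitude in pulses if amplitude > threshold)
    return detected / (max_rate * duration)


pressure = 0.42
near = decode(transmit(encode(pressure), gain=0.9))  # short wire, little loss
far = decode(transmit(encode(pressure), gain=0.3))   # long wire, heavy loss

print(f"decoded near the sensor: {near:.2f}")
print(f"decoded far from sensor: {far:.2f}")
```

As long as the weakened pulses stay above the detection threshold, the decoded value does not depend on `gain`, which mirrors the claim that the signal loses little information over distance.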

Pulses from multiple sensors would meet at devices called synaptic transistors, which combine the signals into a pattern of pulses — similar to what happens when nerves meet at synaptic junctions. Then, instead of processing signals from every sensor, a machine-learning algorithm needs only to analyse the signals from several synaptic junctions, learning whether those patterns correspond to, say, the fuzz of a sweater or the grip of a ball.

In 2018, Bao’s lab built this capability into a simple, flexible, artificial nerve system that could identify Braille characters [10]. When attached to a cockroach’s leg, the device could stimulate the insect’s nerves, demonstrating the potential for a prosthetic device that could integrate with a living creature’s nervous system.

Ultimately, to make sense of sensor data, a robot must rely on machine learning. Conventionally, processing a sensor’s raw data was tedious and difficult, Calandra says. To understand the raw data and convert them into physically meaningful numbers such as force, roboticists had to calibrate and characterize the sensor. With machine learning, roboticists can skip these laborious steps. The algorithms enable a computer to sift through a huge amount of raw data and identify meaningful patterns by itself. These patterns — which can represent a sufficiently tight grip or a rough texture — can be learnt from training data or from computer simulations of its intended task, and then applied in real-life scenarios.

“We’ve really just begun to explore artificial intelligence for touch sensing,” Calandra says. “We are nowhere near the maturity of other fields like computer vision or natural language processing.” Computer-vision data are based on a two-dimensional array of pixels, an approach that computer scientists have exploited to develop better algorithms, he says. But researchers still don’t fully know what a comparable structure might be for tactile data. Understanding the structure for those data, and learning how to take advantage of them to create better algorithms, will be one of the biggest challenges of the next decade.

Barrier removal

The boom in machine learning and the variety of emerging hardware bode well for the future of tactile sensing. But the plethora of technologies is also a challenge, researchers say. Because so many labs have their own prototype hardware, software and even data formats, scientists have a difficult time comparing devices and building on one another’s work. And if roboticists want to incorporate touch sensing into their work for the first time, they have to build their own sensors from scratch, an often expensive task that is not necessarily in their area of expertise.

This is why, in November 2021, GelSight and Meta AI announced a partnership to manufacture a camera-based fingertip-like sensor called DIGIT. With a listed price of $300, the device is designed to be a standard, relatively cheap, off-the-shelf sensor that can be used in any robot. “It definitely helps the robotics community, because the community has been hindered by the high cost of hardware,” Santos says.

Depending on the task, however, you don’t always need such advanced hardware. In a paper published in 2019, a group at MIT led by Subramanian Sundaram built sensors by sandwiching together a few layers of material that change electrical resistance under pressure [11]. These sensors were then incorporated into gloves, at a total material cost of just $10. When aided by machine learning, even a tool as simple as this can help roboticists to better understand the nuances of grip, Sundaram says.
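One common way to read a pressure-sensitive resistor is a voltage divider, sketched below. This is an assumed readout, not the circuit used in the MIT gloves: the supply voltage, the fixed resistor value and the function name `sensor_resistance` are hypothetical, and the sketch also assumes that the sensor's resistance falls as pressure rises.

```python
def sensor_resistance(v_out, v_in=3.3, r_fixed=10_000.0):
    """Estimate sensor resistance (ohms) from a voltage-divider reading.

    Assumed circuit: v_in -> sensor -> measurement node -> r_fixed -> ground,
    so v_out = v_in * r_fixed / (r_sensor + r_fixed).
    """
    if not 0 < v_out < v_in:
        raise ValueError("v_out must lie strictly between 0 and v_in")
    return r_fixed * (v_in - v_out) / v_out

# Under the stated assumption, a firmer press lowers the sensor's resistance,
# which raises the voltage measured at the node.
light_press = sensor_resistance(1.0)
firm_press = sensor_resistance(2.5)
print(round(light_press), round(firm_press))
```

Readings like these, taken across many sensing points, are the kind of raw signal a learning algorithm could then interpret.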

Not every roboticist is a machine-learning specialist, either. To help, Meta AI has released open-source software for researchers to use. “My hope is by open-sourcing this ecosystem, we’re lowering the entry bar for new researchers who want to approach the problem,” Calandra says. “This is really the beginning.”

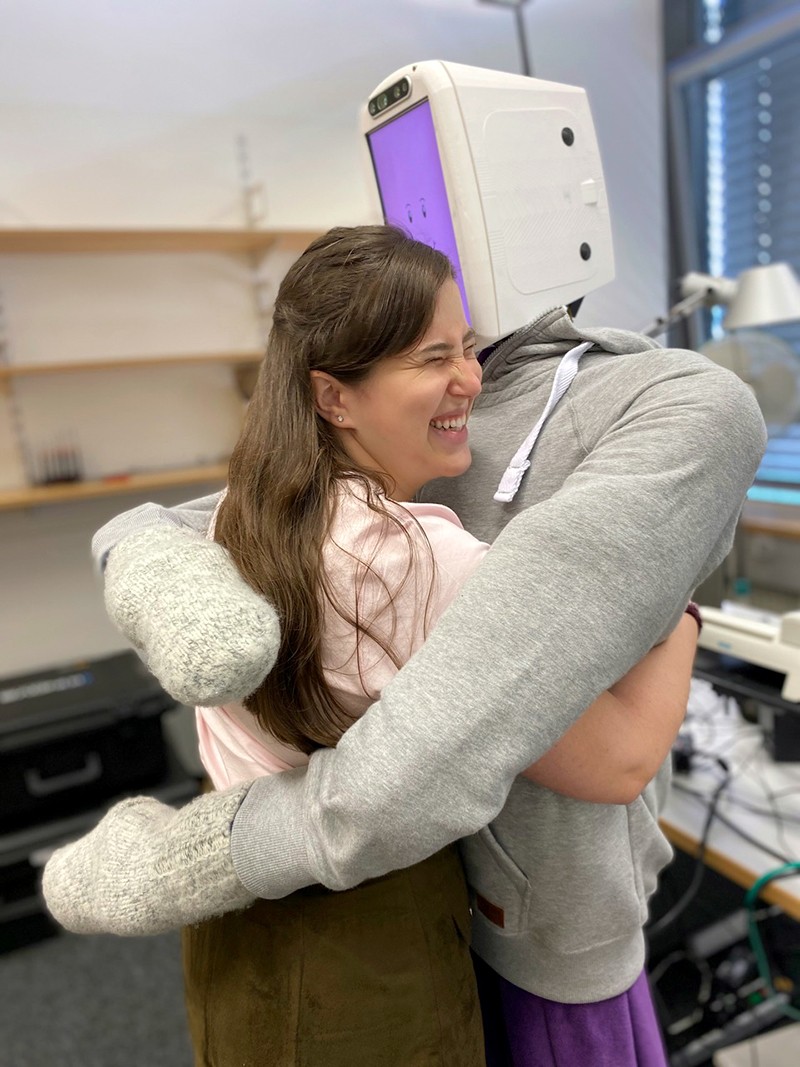

Although grip and dexterity continue to be a focus of robotics, that’s not all tactile sensing is useful for. A soft, slithering robot might need to feel its way around to navigate rubble as part of search-and-rescue operations, for instance. Or a robot might simply need to feel a pat on the back: Kuchenbecker and her student Alexis Block have built a robot with torque sensors in its arms and a pressure sensor and microphone inside a soft, inflatable body that can give a comfortable and pleasant hug, and then release when you let go. That kind of human-like touch is essential to many robots that will interact with people, including prosthetics, domestic helpers and remote avatars. These are the areas in which tactile sensing might be most important, Santos says. “It’s really going to be the human–robot interaction that’s going to drive it.”

So far, robotic touch is confined mainly to research labs. “There’s a need for it, but the market isn’t quite there,” Santos says. But some of those who have been given a taste of what might be achievable are already impressed. Schrenk’s tests of ADA, the feeding robot, provided a tantalizing glimpse of independence. “It was just really cool,” he says. “It was a look into the future for what might be possible for me.”

doi: https://doi.org/10.1038/d41586-022-01401-y

This article is part of Nature Outlook: Robotics and artificial intelligence, an editorially independent supplement produced with the financial support of third parties.

References

1. Song, H., Bhattacharjee, T. & Srinivasa, S. S. 2019 International Conference on Robotics and Automation 8367–8373 (IEEE, 2019).

2. Yuan, W., Dong, S. & Adelson, E. H. Sensors 17, 2762 (2017).

3. Sun, H., Kuchenbecker, K. J. & Martius, G. Nature Mach. Intell. 4, 135–145 (2022).

4. Boutry, C. M. et al. Sci. Robot. 3, aau6914 (2018).

5. Guo, H. et al. Nature Commun. 11, 5747 (2020).

6. Bhirangi, R., Hellebrekers, T., Majidi, C. & Gupta, A. Preprint at http://arxiv.org/abs/2111.00071 (2021).

7. Wade, J., Bhattacharjee, T., Williams, R. D. & Kemp, C. C. Robot. Auton. Syst. 96, 1–14 (2017).

8. Huh, T. M. et al. 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems 1786–1793 (IEEE, 2021).

9. Nadeau, P., Abbott, M., Melville, D. & Stuart, H. S. 2020 IEEE International Conference on Robotics and Automation 3701–3707 (IEEE, 2020).

10. Kim, Y. et al. Science 360, 998–1003 (2018).

11. Sundaram, S. et al. Nature 569, 698–702 (2019).