After super-powerful chatbots such as ChatGPT-4 started becoming widely available this year, school administrators around the world moved to ban the technology from classroom education. Nearly half a dozen US districts blocked access to AI and other multimodal large language models (MLLMs) on school devices and networks, and some Australian schools turned to pen-and-paper exams after students were caught using chatbots to write essays.

Teacher resistance reached its peak when ChatGPT-4 was released in March 2023. Developed by San Francisco-based OpenAI, this generative AI can write poetry and songs, and it passed the US bar exam in the 90th percentile. MLLMs can process images as well as text, and they answer queries by looking for patterns in online data.

When asked why Seattle schools had moved to restrict ChatGPT-4 from district-owned devices, a spokesperson for the district, Tim Robinson, responded: “Generative AI makes it possible to produce non-original work, and the school district requires original work and thought from students.”

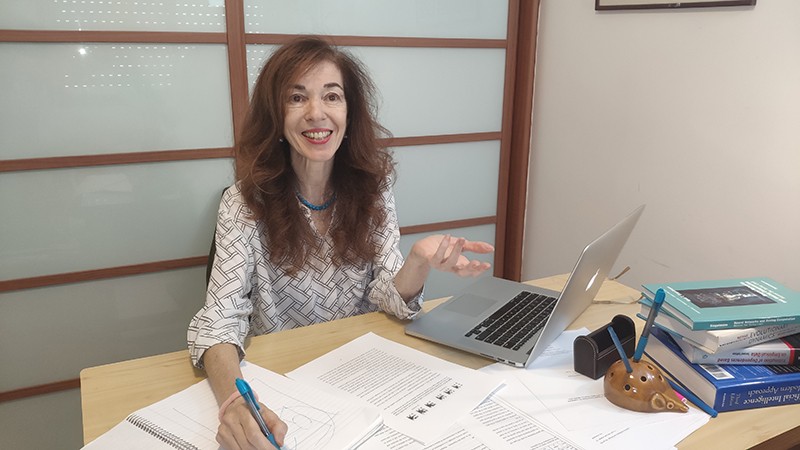

However, confronted with AI’s seemingly inevitable growth, many schools are now reversing course, albeit carefully. “There’s still a fear that students will use large language models as shortcuts instead of practicing to become better writers,” says Tamara Tate, a project scientist at the University of California, Irvine’s Digital Learning Lab. She adds that if AI is here to stay then students might be better served by educational strategies that promote creative uses of the technology. “These tools can provide students with in-the-moment learning partners on a huge range of topics.”

In the view of Tate and other experts, MLLMs have several positive educational roles to play, including encouraging students to evaluate answers rather than automatically accepting them. Careful thought is needed to ensure that these potential upsides are realized, however, and to mitigate any potential downsides. How might AI-assisted education unfold?

Classroom gains and losses

Proponents of the educational uses of generative AI point to several advantages. For one thing, ChatGPT-4 has an extraordinary command of proper sentence structure, which Tate says could be especially useful for non-native speakers seeking insight into how to correctly incorporate words and phrases in real-world settings.

Xiaoming Zhai, a visiting professor who studies applications for machine learning in science education at the University of Georgia in Athens, believes that teachers also stand to benefit from using models like ChatGPT as teaching aids. The models can generate personalized lesson plans and other resources geared to the needs of individual students while assisting with grading and other mundane tasks. In Zhai’s view, that capability frees time so that teachers can provide students with more one-on-one feedback. By efficiently automating basic tasks like searching out relevant literature and materials and summarizing content, the models allow students and teachers alike to “focus more on creative thinking”.

Creative thinking will help people get the most from MLLMs. “Large language models are like search engines: garbage in, garbage out,” Tate wrote in a recent preprint paper.

Teachers can help their students develop expert prompting and search optimization strategies to generate the most helpful content. “To use the technology effectively, students need to double down on the work of revision,” Tate says. “ChatGPT-4 can generate a fluent first-draft response, but not a lot of deep content. The responses can be vague and often wrong.”

While researching this article, we asked ChatGPT-4 to tell us, in its own words, why it would be a helpful tool for education. Seconds later, the model provided a detailed answer in which it claimed it had access to vast amounts of knowledge and could respond instantly to questions in multiple languages at any time. But the model was also candid about its limitations, pointing out that if ChatGPT-4 doesn’t understand the nuances of a particular question, then it might deliver incomplete or erroneous information that could be problematic for students who rely solely on the model for answers.

Because MLLMs may fail to support their claims with reasons or evidence, they give teachers an opportunity to demonstrate the need for critical reasoning. “Students need to think about who said what and why in a given response,” Tate says.

Lea Bishop, a law professor at Indiana University’s Robert H. McKinney School of Law in Indianapolis, agrees that potential inaccuracies will require students to scrutinize the model’s output. “You have to develop the habit of questioning everything you see,” she says. “That means asking probing follow-up questions and triangulating with other sources of knowledge to see what matches up. I need you to show me that you’re better than the computer.”

Dealing with cheating and secrecy

Some experts worry that, for less motivated students, these sorts of models provide a tempting source of ready-made content that diminishes critical thinking skills. The predecessors to ChatGPT-4 proved themselves capable of generating essays and responses to short-answer and multiple choice exam questions. “We already have a lot of problems with students who feel that learning equates to searching, copying and pasting,” says Paulo Blikstein, an associate professor of communications, media, and learning technologies at Columbia University, in New York. “With AI, we have an even greater risk that some will take the shortest and easiest path, and incorporate those heuristics and methods as a default mode.”

Teachers can try to flag AI-generated content with software packages called output detectors. But these packages have questionable reliability, and in July 2023, OpenAI discontinued its own output detector, citing concerns over low accuracy. Experts worry that models like ChatGPT-4 will increasingly put teachers into the unwanted role of having to police students who break rules on AI-generated content.

Such concerns are valid, and contributed to the initial negative responses. Blikstein says early school restrictions may be seen as a “knee-jerk reaction against something that is still very hard to understand”.

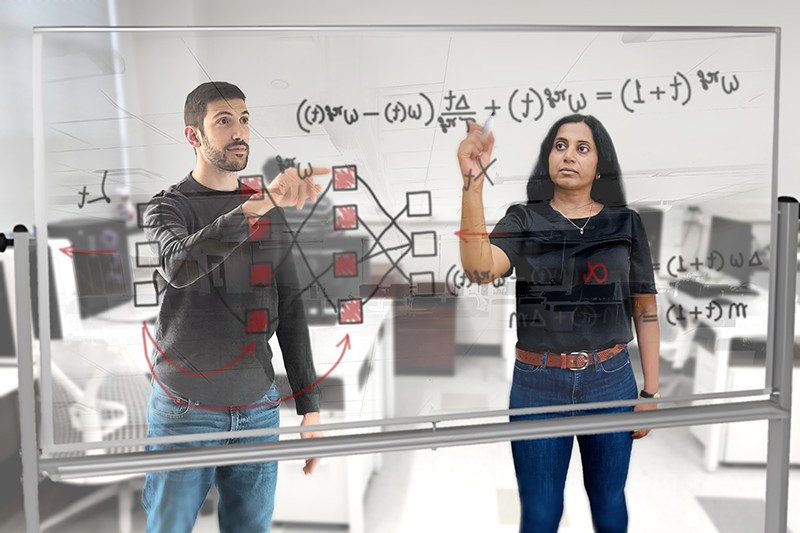

And although these bans are gradually being lifted, ChatGPT is not yet in the clear: its workings remain opaque, even to the experts. Between its inputs and outputs are billions of ‘black-box’ computations. ChatGPT is said to be OpenAI’s most secretive release yet. The company hasn’t disclosed anything about how the model was trained, and proprietary systems developed by competing companies are now driving an AI ‘arms race’ — advancing at mind-boggling speed.

Defining core skills

Does the rise of MLLMs mean that writing itself will go the way of older skills, much as basic mathematical competence was rendered nearly obsolete by calculators? Experts offer a range of opinions. Taking a bullish stance, Bishop argues that functional writing skills such as spelling, grammar, and knowledge of how to organize a standard essay “will be totally obsolete two years from now”. Others see a need for caution. “Without practice writing their own content, it will be hard for students to predict where and how writing mistakes are made, and then spot them in AI-generated content,” Tate says.

In Blikstein’s view, this grey area underscores a need to proceed slowly. “The stakes are high with language,” he says, adding that generative AI can be a powerful partner for enhancing — not replacing — a student’s cognition. But important questions remain. “For instance, we don’t have a good model for authorship in the area of AI-generated content,” he says. “The text appears out of the ether, and we have no idea where it came from.” For accomplished professionals, using AI to boost writing skills may not pose much of a problem. “But that’s not true for younger people who don’t understand the craft of writing to begin with,” he adds.

Blikstein also worries that AI might perpetuate educational inequities. Wealthier school districts have resources to apply the technology with an emphasis on human interaction and project-based learning, while poorer schools might move increasingly towards automation to save money. “If you settle for something cheap, it can take over your whole system,” he says. “Then five years later, it’s the new normal.”

Ultimately, AI could offer an evolution in educational norms that sends educators back to basics. “We have to identify the core competencies that we want our students to have,” says Zhai. “How are we going to incorporate models like ChatGPT into the learning process? We are preparing future citizens, and if AI will be available, then we need to think about how we build competence in education so that students can be successful.”


Mobile robots can dance around a stage, perform graceful acrobatics and even lift heavy objects. But if you watched them strut their stuff for an hour or two, you would see the robots grind to a halt. Like humans, mobile robots eventually exhaust the energy that they carry, and need a recharge.

This problem is specific to mobile robots. Robots anchored to a factory floor can do heavy work all day and all night because they can draw inexhaustible energy from the electric grid. Mobility gives robots more flexibility, but at the cost of needing to recharge their energy sources, which in most cases are some form of battery.

The compact nature of smartphones can fool us into thinking batteries are featherweight objects. That illusion arises because modern electronics need only a trickle of energy to send signals or process data. But transporting robots or people around, or lifting a heavy load, takes much more energy. If you pick up a cordless tool, you will feel that it outweighs a corded one. An electric car that can travel for five hours (around 500 kilometres, the distance from Paris to Amsterdam) at motorway speed between recharges needs batteries that account for one-third or more of the total vehicle weight.

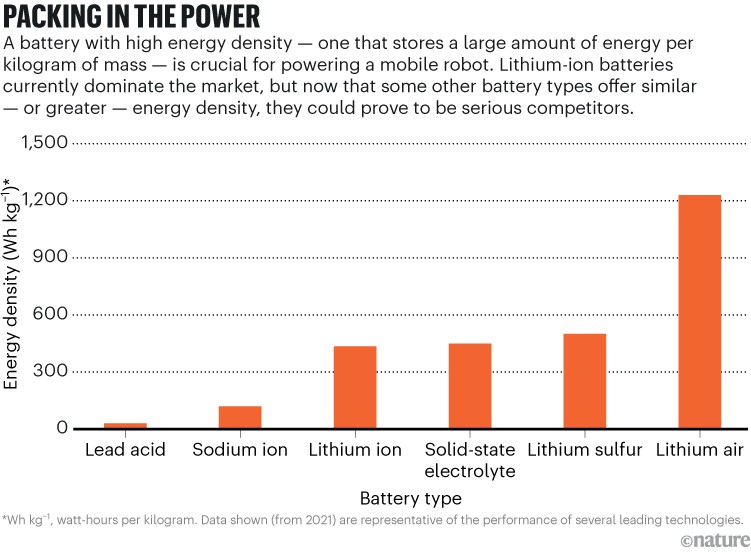

Mobile robots on legs, however, can’t tolerate such massive batteries. Boston Dynamics, a robotics company in Waltham, Massachusetts, sells a four-legged dog-size robot called Spot that weighs about 32 kg, one-eighth of which is batteries. But the company states that it has a typical run-time of only 90 minutes. Humanoid robots that have been developed to walk with heavy loads have the same limitations. Atlas, the company’s 1.5-metre, 89-kilogram humanoid demonstrator with two arms and two legs, can do gymnastics and lift heavy objects. But the company does not say how long it can run before it needs a recharge. For mobile robots to be more capable workers, their batteries will need greater energy density; that is, they will need to pack more watt-hours of energy into fewer kilograms of mass. “Energy density is still quite far from the power we need for robotics,” says Ravinder Dahiya, an electrical engineer specializing in robotics at Northeastern University in Boston, Massachusetts.

How serious the energy-density problem is depends on the robot’s size and structure, its function and how much energy it needs. Robots that walk can navigate stairs, the interiors of buildings and rough terrain better than wheeled robots can, but they can’t carry as big a battery pack. Sustained flight requires even more energy, making battery weight a serious limit for anything much bigger than insect size.

The limits of lithium

Batteries have come a long way since the Italian physicist Alessandro Volta invented the earliest version of this technology in 1800. Today, the state-of-the-art power source is the lithium-ion battery, invented in the 1970s by chemist Stanley Whittingham, and now widely used in phones, laptops, tools and electric vehicles. The technology earned Whittingham, now at the State University of New York at Binghamton, a share of the 2019 Nobel Prize in Chemistry.

Batteries don’t generate energy; they store energy produced by chemical reactions that yield positive ions and electrons. The ions accumulate at one end of the battery, called the cathode, and the electrons at the other end, called the anode. The ions and electrons sit on these two ends until they are connected by a conductor, which completes the circuit and allows the electrons to flow as a current from the anode to deliver electrical power to an externally connected load — such as an electric motor — and then to the cathode. When the chemicals are used up, the battery must either be replaced or recharged by passing a current through it in the opposite direction, to reverse the reaction.

A major advantage of batteries is that they directly deliver energy in the form of electricity, whereas fossil fuels have to be burned to generate heat that drives an electrical generator. This avoids carbon emissions on the spot, although total emissions depend on how the original energy was produced. However, robots must carry the energy they use, and batteries weigh more and occupy more space than fossil fuels. An electric car, for example, needs a battery pack much larger and heavier than a fuel tank.

When lithium-ion batteries reached the market in 1991, they provided 80 watt-hours of electrical energy per kilogram of battery weight [1]. That meant it took a one-kilogram battery to power a (then standard) 60-watt incandescent bulb for one hour and 20 minutes, making it the best battery available. Now, typical commercial lithium-ion batteries carry three times more energy per kilogram. But even such energy-packed batteries are too hefty for a walking robot to lug around.
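The arithmetic behind these figures can be sketched in a few lines of Python. The function names are hypothetical, "three times more" is read here as 3 × 80 = 240 watt-hours per kilogram, and the robot in the second half is purely illustrative: it borrows the 32-kilogram mass, one-eighth battery share and 90-minute run-time quoted earlier for Spot, but applying a cell-level figure to a whole pack is an assumption, not a manufacturer specification.

```python
def stored_energy_wh(battery_mass_kg: float, specific_energy_wh_per_kg: float) -> float:
    """Energy held by a battery of the given mass, in watt-hours."""
    return battery_mass_kg * specific_energy_wh_per_kg


def runtime_hours(energy_wh: float, load_w: float) -> float:
    """How long a constant load can run on the stored energy."""
    return energy_wh / load_w


# 1991 lithium-ion cell: 80 Wh/kg, 1 kg battery, 60 W bulb (figures from the text)
early_energy_wh = stored_energy_wh(1.0, 80.0)
bulb_minutes = round(runtime_hours(early_energy_wh, 60.0) * 60)
print(f"60 W bulb on a 1 kg 1991 battery: {bulb_minutes} minutes")

# Assumption: "three times more" is read as 3 x 80 = 240 Wh/kg
modern_specific_energy = 3 * 80.0

# Illustrative robot: 32 kg total, one-eighth of it battery, 90-minute run-time.
# Treating the whole pack as 240 Wh/kg is an optimistic assumption, because
# casing and electronics add mass that stores no energy.
pack_mass_kg = 32.0 / 8
pack_energy_wh = stored_energy_wh(pack_mass_kg, modern_specific_energy)
average_power_w = pack_energy_wh / 1.5  # 90 minutes = 1.5 hours
print(f"Pack: {pack_mass_kg} kg, about {pack_energy_wh:.0f} Wh, "
      f"about {average_power_w:.0f} W average over 90 minutes")
```

Under these assumptions the robot has a few hundred watts to spend on average, which gives a feel for why heavier loads or longer shifts call for greater energy density rather than simply a bigger pack.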

Chemistry quest

Lithium-ion batteries are running out of steam. The chemistry has “less and less room for improvement”, says Richard Schmuch, a chemist at the Fraunhofer Research Institution for Battery Cell Production in Münster, Germany. Lithium itself is rare and expensive. The same is true for cobalt, another crucial element which can make up to 20% of the weight of the cathode in lithium-ion batteries for electric vehicles. Extracting both elements requires large amounts of energy and water. Moreover, the mining of cobalt has been linked to the exploitation of workers.

Another concern is optimizing batteries to meet the needs of robotics. “The lithium-ion battery is quite versatile,” says Schmuch. “You can adjust it for different types of operating condition,” from smartphones to cars to robots. Yet it can’t do everything cost-effectively and well. He expects new types of battery will be needed to serve the emerging demands of robotics as well as other applications.

After more than 30 years of development, lithium-ion batteries are considered to be a mature technology. Still, efforts continue to improve these complex electrochemical systems. They are assembled in units called cells, which are packaged together to provide a desired electrical output. Each cell contains — in addition to the anode and cathode — an electrolyte through which ions can move, a separator to prevent short circuits and electrical terminals that connect to other cells in the packaged battery. Extensive research has gone into the composition of each part to achieve high energy density, charging and discharging rates, reliability and longevity. Among the important successes of this technology are batteries that can be recharged as many as 6,000 times.

One attempt to enhance the performance of lithium entails making the anode and cathode from nanostructured sulfur-graphite composites rather than from standard graphite. Such lithium–sulfur batteries offer the potential of lower costs and higher energy density. These batteries have yet to be commercialized successfully, however; their use might be limited to specialized applications, such as aviation, for which minimizing battery weight is crucial to get off the ground. Battery features could be tailored by adjusting design details, such as the type of nanostructure used and how the ions and electrons flow through the battery. But what many developers want is new battery chemistries designed to meet a variety of needs (see ‘Packing in the power’).

That might entail stepping back from lithium’s biggest attraction — it is the lightest metal among the elements, with an atomic weight of seven. Yet, although lithium is absolutely essential to the battery’s energy storage and release, other materials make up more of the battery’s mass. Including the packaging, only about 1% of the weight of a lithium-ion battery is lithium (most of that in the cathode). The cathode also contains more of four other metals: cobalt, nickel, aluminium and manganese. Several problems with lithium and cobalt have led to serious interest in sodium-ion batteries.

Like lithium, sodium is an alkali metal, and the chemistry of the two is so similar that researchers have pursued sodium-ion batteries as a way around the problems with lithium. One important advantage of the sodium-ion design is the ready availability of sodium in seawater and salt deposits — avoiding the supply-chain problems arising from the cost and scarcity of lithium. Sodium-ion batteries are a bit heavier per kilowatt-hour of energy — sodium’s atomic weight is 23, more than triple that of lithium. Still, lower material costs are expected to make sodium-ion batteries significantly cheaper. An even bigger benefit of switching to sodium would come from reducing or eliminating the need for cobalt in the cathode, which has been demonstrated in several samples.
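A rough calculation hints at why tripling the atomic weight need not triple the battery weight. The sketch below uses the article's rounded atomic weights (7 and 23) and its figure of about 1% lithium by mass, and it assumes, purely for illustration, that each lithium ion is swapped for one sodium ion while everything else stays the same. Real sodium-ion cells also differ in voltage and electrode materials, so this is a first-order picture rather than a prediction; the variable names are hypothetical.

```python
LITHIUM_ATOMIC_WEIGHT = 7.0   # rounded value quoted in the text
SODIUM_ATOMIC_WEIGHT = 23.0   # rounded value quoted in the text

# Share of a lithium-ion battery's mass that is lithium (about 1%, from the text)
lithium_mass_fraction = 0.01

# Ratio of atomic weights: "more than triple"
weight_ratio = SODIUM_ATOMIC_WEIGHT / LITHIUM_ATOMIC_WEIGHT

# Assumption: one sodium ion replaces each lithium ion and nothing else changes.
# The extra mass is then the lithium share scaled by (ratio - 1).
extra_mass_fraction = lithium_mass_fraction * (weight_ratio - 1.0)

print(f"Atomic-weight ratio: {weight_ratio:.2f}")
print(f"Extra battery mass under this assumption: {extra_mass_fraction * 100:.1f}%")
```

Because the charge-carrying metal is such a small share of the total, the ion swap alone adds only a few per cent under these assumptions.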

“Sodium ions are definitely gaining traction,” says Schmuch, citing development of them in Germany, where Fraunhofer is working with industry, and in China, where Contemporary Amperex Technology (CATL) in Ningde, the world’s leading manufacturer of lithium-ion batteries for electric vehicles, rolled out the first generation of its sodium-ion battery in 2021. This April, Chery Automobile in Wuhu, China, announced plans to install CATL sodium-ion batteries in its cars. Also in April, CATL said it had developed a new electric vehicle battery with an energy density of 500 watt-hours per kilogram. This battery employs a different technology, which CATL has not identified.

Solid-state solutions

Another way to change battery chemistry is to change the state of the electrolytes, replacing the conductive liquids used in lithium-ion batteries with conductive solids. Advocates think such solid-state batteries offer the best prospects for preventing the potentially deadly fires seen with lithium batteries, as well as for improving energy density and reducing costs.

The fire hazard comes from filamentary deposits of metallic lithium called dendrites that grow in electrolytes in the batteries. Lithium atoms present in the solvent crystallize to form metal filaments that spread like plant roots. The metallic lithium is conductive, and as the dendrites spread they can short circuit the battery and ignite fires. Sodium is much less prone to dendrite formation, and developers think that this quality makes sodium-ion batteries significantly safer than lithium-ion batteries. They would also be lower cost and in the long term potentially offer higher energy density.

A wide range of solid-state batteries are in development. Lithium is still a popular material because of its light weight, high energy density and rechargeability. But some researchers are exploring other metals with the hope of avoiding the known problems of lithium.

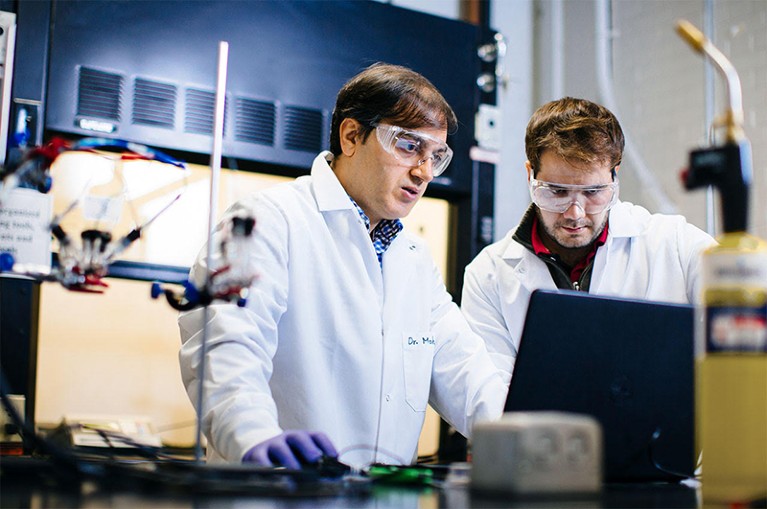

Switching one type of lithium battery in particular from liquid to solid-state electrolytes has led to a big advance in efficiency. This beneficiary is the lithium-air battery, which produces power from the oxidation of lithium atoms by oxygen from the air. As with sodium-ion batteries, energy density was not the main goal for many people working on solid-state batteries. “The reason for developing a solid-state electrolyte was to make the lithium-air battery more safe and to make recharging cycles more stable,” says Mohammad Asadi, a chemical engineer at the Illinois Institute of Technology in Chicago.

However, when Asadi and his colleagues from Argonne National Laboratory in Lemont, Illinois, built an experimental solid-state lithium-air battery [2], they were surprised to discover that the technology brought significant benefits in energy density as well. The device transferred four electrons per reaction rather than the one or two electrons per reaction that lithium-air batteries normally produce. As a result, Asadi says, the solid electrolyte “helps us store three to four times more energy per unit weight” than is possible with conventional lithium-ion batteries.

In fact, their new solid electrolyte changed the chemistry between oxygen and lithium. In standard lithium-air batteries, oxygen molecules from the air react with lithium atoms to produce one of two compounds. The reaction between one lithium atom and one oxygen molecule produces lithium superoxide (LiO₂), which yields one electron. The reaction between two lithium atoms and one oxygen molecule produces lithium peroxide (Li₂O₂), which yields two electrons.

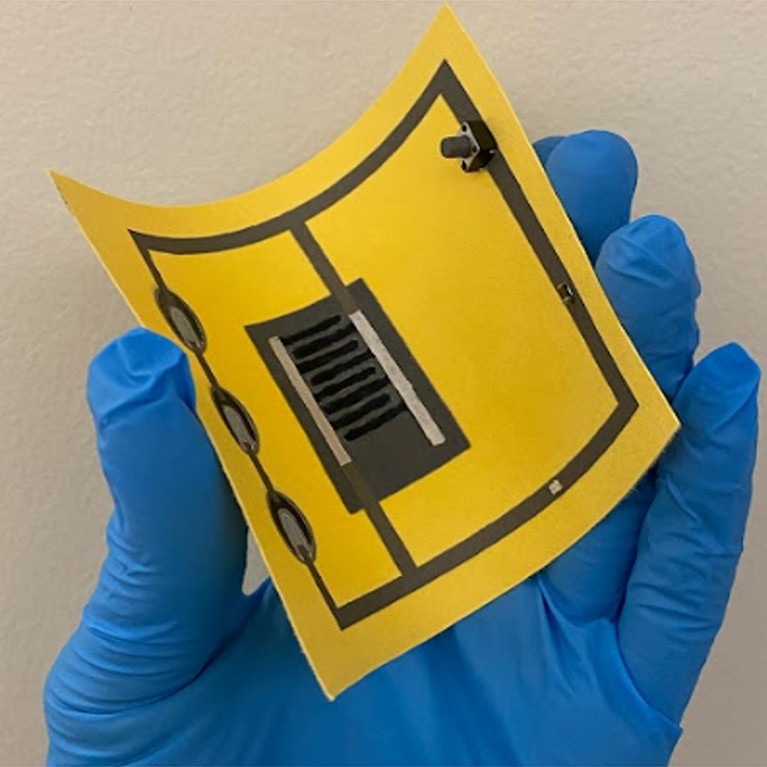

Asadi’s team made its solid electrolyte by combining nanoparticles containing lithium, germanium, phosphorus and sulfur (Li₁₀GeP₂S₁₂) with a polymer. In this structure, two lithium atoms can combine with a single oxygen atom to yield lithium oxide (Li₂O), a reaction that transfers four electrons for each oxygen molecule consumed. That’s hard to do because it requires splitting an oxygen molecule (O₂) to produce single oxygen atoms. The test cell, only the size of a coin, was a proof of concept. According to Asadi, this prototype shows that it will be possible to attain a specific energy of one kilowatt-hour per kilogram, higher than is possible with today’s lithium-ion technology.
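Written per oxygen molecule, the three discharge products described above correspond to the following overall reactions. These are the standard textbook forms, given here as a reading aid rather than as the detailed mechanism reported by Asadi's team:

```latex
\begin{align*}
\mathrm{Li^{+} + e^{-} + O_{2}} &\rightarrow \mathrm{LiO_{2}}
  && \text{(lithium superoxide, 1 electron per O}_2\text{)} \\
\mathrm{2\,Li^{+} + 2\,e^{-} + O_{2}} &\rightarrow \mathrm{Li_{2}O_{2}}
  && \text{(lithium peroxide, 2 electrons per O}_2\text{)} \\
\mathrm{4\,Li^{+} + 4\,e^{-} + O_{2}} &\rightarrow \mathrm{2\,Li_{2}O}
  && \text{(lithium oxide, 4 electrons per O}_2\text{)}
\end{align*}
```

The third line makes the bookkeeping explicit: each oxygen atom takes up two electrons, so splitting one O₂ molecule into two atoms accounts for four.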

Power-studded structures

Increasing the energy density of batteries makes it possible for these power packs to weigh less, which in turn would allow mobile robots of a given size to do more work. Battery design for such robots involves much more than chemistry. And one way to realize this would be to have smaller batteries serve as structural elements of a robot — not just storing energy but also becoming parts of its torso and legs to help it walk and balance. The idea comes from biology. Our bones are not just structural support — they also contain bone marrow that produces blood cells. “Multifunctionality is critical” when you’re building robots that move around, says Nicholas Kotov, a chemical engineer at the University of Michigan in Ann Arbor.

“Robots are biomimetic, and the smaller the robot, the more biological concepts would need to be there,” Kotov says. He and other roboticists call two-legged robots humanoid because they walk upright and have similar body mechanics to people. “We want to keep robots light and consuming as small an amount of energy as possible,” Kotov says. “If a battery just sits there and does nothing else [but provide power], it is not enough.”

Kotov’s group is particularly interested in drones, in which, he says, “every gram counts, and if the battery can serve multiple functions, we can have more functional space”. His team is now working on structural batteries for military drones, although not much of that work has been disclosed yet. Military laboratories have also worked on humanoid robots for missions such as working inside radiation zones and checking for insurgents hiding inside buildings in combat zones.

Some materials used for battery energy storage are particularly well-suited for also being structural elements. For example, Kotov says, “zinc is a very good case for structural batteries”. It is inexpensive, stores energy well and the metal is stable in air. His lab demonstrated a biomorphic zinc battery that could store 72 times more energy than a lithium battery of the same volume [3]. However, trade-offs are inevitable. Zinc batteries have limited rechargeability, so they would be best kept stashed away for infrequent use.

Another promising multifunctional material is aluminium, which, last year, showed rapid rechargeability over hundreds of cycles at temperatures up to just above the boiling point of water, and without forming aluminium dendrites [4]. The researchers project a cost of less than one-sixth of that of comparable lithium-ion batteries with a similar energy capacity.

Kotov is also developing aramid fibres to provide structural strength for battery casings and internal battery structures [3]. These fibres have a fortunate combination of features including strength, flexibility and hardness that makes them useful for protective shielding. One particularly helpful attribute is their ability to block dendrites from growing between the electrodes. Moreover, aramid offers an environmental advantage: it can be made from recycled Kevlar, a strong, lightweight material, and when the batteries are worn out, the fibres can be recycled for further uses.

Energy beyond batteries

By 2030, Dahiya expects the development of energy sources for mobile robotics to broaden well beyond batteries. Some of these concepts have their roots in biology.

One example is equipping robots that operate at remote sites with energy harvesters, which collect energy from the local environment to top up their stored energy [5]. Robots can collect energy in the form of radio waves or sunlight, or from a thermal gradient. Energy harvesting is not as efficient as heat pumps or wireless chargers, but it can operate in any suitable environment without special charging equipment. And there have been demonstrations of tiny bacteria-driven microbial fuel cells, or ‘biobatteries’, that could harvest material from the local biota to provide supplemental power [6].

Another concept borrowed from biology is distributing energy in various ways through the robot’s body rather than concentrating it in a single battery backpack as used on some experimental humanoid robots [5]. Humans have three types of energy storage: triglycerides in fat cells, glycogen clusters around muscles and ATP that’s produced by mitochondria. Those systems evolved to serve different energy needs. “Humans and animals require fast energy and slow energy,” says Kotov. They need the fast energy to sprint, as well as slow energy to walk for many kilometres.

Robots and drones likewise have different needs at different times. A humanoid robot needs fast energy to lift a heavy load or run up stairs, and slower energy to patrol a field or a car park. Batteries are fine for a steady walk or jog, but not for a sprint. This gap has led to an interest in equipping robots with a different type of device, a supercapacitor, that delivers electrical energy much faster. Instead of using chemistry to store energy, a supercapacitor stores an electrical charge that it collects over a period of time from an electrical circuit. When a burst of energy is needed, the system discharges the stored electrons extremely quickly. Supercapacitors are used in regenerative braking systems in vehicles and can withstand many more charge–discharge cycles than can batteries [7]. In the future, they could give mobile robots a quick start, or a quick stop.
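The trade-off between fast and slow energy can be illustrated with the standard capacitor relation E = ½CV². The sketch below is not drawn from the article: the 100-farad, 2.7-volt device, the one-second burst and the function names are all hypothetical values chosen for illustration, and it assumes the capacitor is discharged completely, which real systems do not do.

```python
def capacitor_energy_joules(capacitance_f: float, voltage_v: float) -> float:
    """Energy stored in an ideal capacitor: E = 0.5 * C * V^2."""
    return 0.5 * capacitance_f * voltage_v ** 2


def average_power_watts(energy_j: float, duration_s: float) -> float:
    """Average power if the energy is released evenly over the duration."""
    return energy_j / duration_s


# Hypothetical supercapacitor cell (illustrative values, not from the article)
energy_j = capacitor_energy_joules(capacitance_f=100.0, voltage_v=2.7)
energy_wh = energy_j / 3600.0  # 1 Wh = 3600 J

# Assumption: the full charge is released in a one-second burst
burst_power_w = average_power_watts(energy_j, duration_s=1.0)

print(f"Stored energy: {energy_j:.1f} J ({energy_wh:.3f} Wh)")
print(f"Average power over a 1 s burst: {burst_power_w:.1f} W")
```

With these numbers the device holds only about a tenth of a watt-hour, far less than a battery of similar size, yet it can release that energy at hundreds of watts for a moment, which is the sprint-versus-walk distinction Kotov describes.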

The lithium-ion generation of batteries put smartphones in our pockets and electric cars on our roads. Researchers are now in the early stages of developing a generation of portable energy sources that will be lighter, more efficient and potentially cheaper.

Yet costs might still be troublesome, cautions materials chemist Donald Sadoway at the Massachusetts Institute of Technology in Cambridge. Sadoway, who focuses on energy-storage technologies, sees little interest in “new battery chemistries whose price-to-performance ratio is less favourable than that of today’s lithium-ion”. It is unclear, he says, whether “the commercial opportunity is large enough to attract the investment needed for the requisite research and development to invent the new technology and to bring it to market”.

The prospects look bright to materials scientist Shirley Meng, who is chief scientist at the Argonne Collaborative Center for Energy Storage Science at Argonne National Laboratory. She says using the oxygen from the air as a cathode is “the ultimate dream of battery scientists” as it offers high energy with light weight. “Good progress has been made” on the lithium-air battery, on which Argonne collaborated, but she says “we still face a lot of challenges in understanding and overcoming the limiting factors in enabling air cathodes”.

Meng predicts that the sodium battery, free of elements such as lithium, nickel and cobalt, “will find its niche to shine” because of its high ratio of performance to cost. Solid-state batteries “offer the possibility of achieving the highest volumetric energy density in robotic applications [in which] space is limited”, she says. They also offer unique flexibility in packaging and can operate at extreme temperatures, which is important for some special-purpose robots. Meng is optimistic that developers “will offer a wide variety of battery solutions to different types of robot application”, with the potential “to unlock applications that were previously not possible”.

doi: https://doi.org/10.1038/d41586-023-02170-y

This article is part of Nature Outlook: Robotics and artificial intelligence, an editorially independent supplement produced with the financial support of third parties.

References

1. Duffner, F., Kronemeyer, N., Tübke, J., Leker, J., Winter, M. & Schmuch, R. Nature Energy 6, 123–134 (2021).

2. Kondori, A. et al. Science 379, 499–505 (2023).

3. Wang, M. et al. Sci. Robot. 5, eaba1912 (2020).

4. Pang, Q. et al. Nature 608, 704–711 (2022).

5. Mukherjee, R., Ganguly, P. & Dahiya, R. Adv. Intell. Syst. 5, 2100036 (2023).

6. Gao, Y., Mohammadifar, M. & Choi, S. Adv. Mater. Technol. 4, 1900079 (2019).

7. Partridge, J. & Ibrahim Abouelamaimen, D. Energies 12, 2683 (2019).

INTRODUCTION / ABSTRACT:

Given the scale of the net zero challenge, banks and financial institutions, in particular, are in a position to play a critical role in accelerating the transition to a low carbon economy by financing activities aligned with the Paris Agreement. In addition, the financial sector has a vital role to play, especially in catalysing and mobilising capital to where it matters most, whether by creating new customised financing solutions that help with achieving environmental objectives or through thought leadership.

ISSUE AT STAKE:

It is no longer surprising that climate change is humanity’s greatest threat, especially in emerging and developing markets facing some of the most significant risks. If we are to deliver on the Paris Agreement and limit global warming to below 1.5°C by 2050, the world must act now.

We have seen how the COVID-19 crisis was a wake-up call on the threats triggered by humanity’s impact on nature and the necessity to integrate these threats into business risk management processes. This is why, today, governments and industry movers are paying increased attention to the risks of inaction on climate change.

In Africa and the Middle East, climate change is especially critical as the region is amongst the most vulnerable to global warming, and most in need of funding to reduce its reliance on fossil fuels. We must ensure that the rapid ongoing economic development of our region can continue by tapping sources of clean energy such as gas and solar, which the region has in abundance. This is why sustainable finance is crucial to delivering a green transition and supporting adaptation to protect against the effects of climate change that are already being felt.

SOLUTIONS:

A significant investment gap must be closed to accelerate the pace of transition in these markets. Emerging markets (of which Africa represents a large proportion) need to invest an additional USD94.8 trillion – a sum higher than annual global GDP – to transition to net zero and meet long-term global warming targets. Standard Chartered’s recently issued report, The Adaptation Economy, investigates the need for climate adaptation investment in 10 markets, including five in Africa and the Middle East. It reveals that without a minimum investment of USD30 billion in adaptation by 2030, these markets could face projected damages and lost GDP growth of USD377 billion: over 12 times that amount. If these countries were to fund the investment themselves, it could reduce household consumption by an estimated 5% p.a.

This would be an especially unfair burden, given the region’s relatively low contribution to global emissions. On the other hand, if funded by public and private capital from developed countries, GDP could be higher by 3.1% in emerging markets and 2% worldwide. A dollar invested can have a significantly different impact depending on where and how it is deployed. It is with clients in emerging and developing markets that sustainable investment can have the most significant impact.

Additionally, the financial sector can be instrumental in financing developing countries’ ability to deploy sustainable solutions. Innovative financing models, such as blended finance, can be utilised as crucial vehicles to incentivise and catalyse the private sector contribution needed to bridge this funding gap. Blended finance allows private sector capital to be funnelled towards projects of societal benefit. It also offers a valuable opportunity to attract philanthropic funds to participate in these structures. Short-term financing, although necessary, will not suffice on its own. Banks and financial institutions must also integrate sustainability considerations into their financing strategies and play a hands-on role in facilitating financing for sustainable projects.

At Standard Chartered, we are committed to supporting the growth of the sustainable finance market. We have announced ambitious new targets to reach net zero carbon emissions from our financing activities by 2050, including committing to interim 2030 targets for the most carbon intensive sectors. The Group’s approach is based on the best available data and aligns with the International Energy Agency’s Net Zero Emissions by 2050 scenario (NZE).

CONCLUSION / CALL TO ACTION:

To mitigate the climate crisis and minimise its threats to the world, we must be just, leaving no nation, region or community behind, and, despite the hurdles, action needs to be swift. To meet the 2050 goal, we must act now and work together: companies, consumers, governments, regulators and the finance industry must collaborate to develop sustainable solutions and provide financing to speed up the adoption of these solutions.

This article was authored by Liam Drew and originally published to Nature

Markus Möllmann-Bohle’s left cheek hides a secret that has changed his life. Under the skin, nestled among the nerve fibres that allow him to feel and move his face, is a miniature radio receiver and six tiny electrodes. “I’m a cyborg,” he says, with a chuckle.

This electronic device lies dormant much of the time. But, when Möllmann-Bohle feels pressure starting to gather around his left eye, he retrieves a black plastic wand about the size of a mobile phone, pushes a button and fixes it against his face in a home-made sling. The remote vibrates for a moment, then launches high-frequency radio waves into his cheek.

In response, the implant fires a sequence of electrical pulses into a bundle of nerve cells called the sphenopalatine ganglion. By disrupting these neurons, the device spares 57-year-old Möllmann-Bohle the worst of the agonizing cluster headaches that have plagued him for decades. He uses the implant several times a day. “I need this device to live a good life,” he says.

Cluster headaches are rare, but extraordinarily painful. People are typically affected for life and treatment options are very limited. Möllmann-Bohle experienced his first in 1987 at the age of 22. For decades, he managed sporadic headaches with a mix of painkillers and migraine medication. But in 2006, his condition became chronic, and he would be struck with as many as eight hour-long cluster headaches every day. “I was forced to succumb to the pain again and again,” he says. “I was kept from living my life.”

Möllmann-Bohle, ever more reliant on painkillers and now also taking antidepressants, was hospitalized numerous times. During one of these stays, however, he heard about an electronic implant that some people had started using to control their cluster headaches.

Developed by the start-up Autonomic Technologies (known as ATI) in San Francisco, California, the device had passed a series of placebo-controlled clinical trials with flying colours. “It worked remarkably well,” says Arne May, a neurologist at the University of Hamburg in Germany who led some of those trials on behalf of the start-up. In most people, stimulation reduced the pain of an attack, made attacks less frequent, or both (ref. 1). Side effects were rare. In February 2012, while US trials continued, the European Medicines Agency granted the company approval to market the device across Europe.

Möllmann-Bohle contacted May, and travelled from his home near Düsseldorf, Germany, to meet him. Filled with hope that this might alleviate his suffering, Möllmann-Bohle underwent surgery to have the device fitted in 2013.

The implant was a revelation. After the pattern and strength of the stimulation had been tailored to Möllmann-Bohle’s needs, around an hour’s use five or six times a day was enough to prevent attacks from becoming debilitating. “I was reborn,” he says.

But, by the end of 2019, ATI had collapsed. The company’s closure left Möllmann-Bohle and more than 700 other people alone with a complex implanted medical device. People using the stimulator and their physicians could no longer access the proprietary software needed to recalibrate the device and maintain its effectiveness. Möllmann-Bohle and his fellow users now faced the prospect of the battery in the hand-held remote wearing out, robbing them of the relief that they had found. “I was left standing in the rain,” Möllmann-Bohle says.

A systemic problem

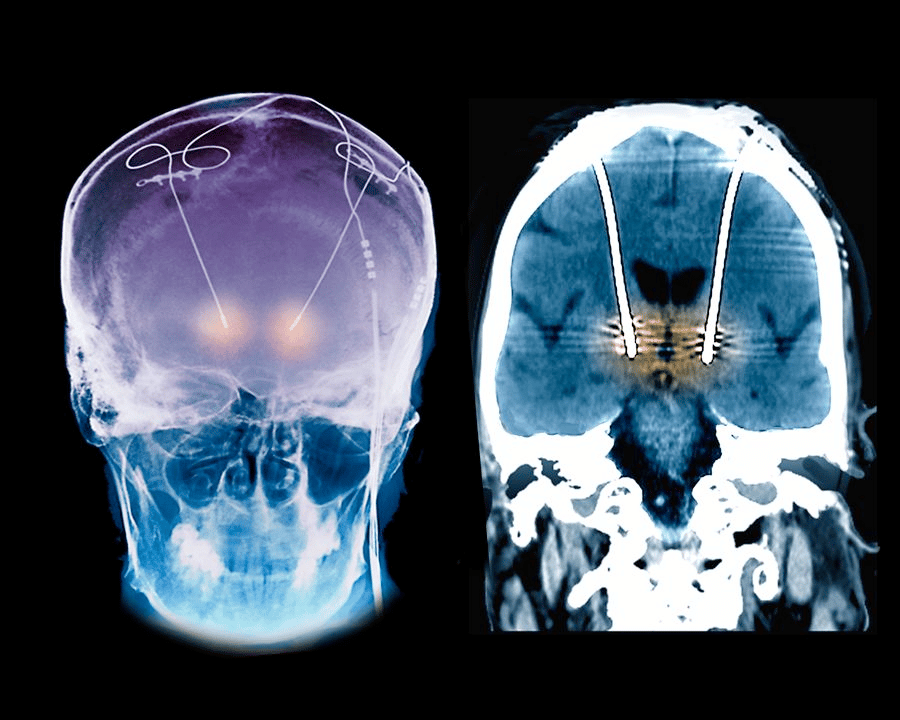

Hundreds of thousands of people benefit from implanted neurotechnology every day. Among the most common devices are spinal-cord stimulators, first commercialized in 1968, that help to ease chronic pain. Cochlear implants that provide a sense of hearing, and deep-brain stimulation (DBS) systems that quell the debilitating tremor of Parkinson’s disease, are also established therapies.

Encouraged by these successes, and buoyed by advances in computing and engineering, researchers are trying to develop ever more sophisticated devices for numerous other neurological and psychiatric conditions. Rather than simply stimulating the brain, spinal cord or peripheral nerves, some devices now monitor and respond to neural activity.

For example, in 2013, the US Food and Drug Administration approved a closed-loop system for people with epilepsy. The device detects signs of neural activity that could indicate a seizure and stimulates the brain to suppress it. Some researchers are aiming to treat depression by creating analogous devices that can track signals related to mood. And systems that allow people who have quadriplegia to control computers and prosthetic limbs using only their thoughts are also in development and attracting substantial funding.
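The detect-then-stimulate loop described above can be sketched in a few lines. This is purely illustrative: the threshold, the window length and the function name `closed_loop` are assumptions made for the example, not details of the approved epilepsy device or of any real implant.

```python
from collections import deque


def closed_loop(samples, threshold=3.0, window=4):
    """Return one boolean per sample: True where this sketch would stimulate.

    Hypothetical rule: stimulate when the mean absolute amplitude of the
    most recent `window` samples exceeds `threshold`.
    """
    recent = deque(maxlen=window)  # sliding window of recent amplitudes
    decisions = []
    for sample in samples:
        recent.append(abs(sample))
        window_full = len(recent) == window
        decisions.append(window_full and sum(recent) / window > threshold)
    return decisions


# A quiet signal followed by a burst of high-amplitude activity.
signal = [1, 1, 1, 1, 5, 5, 5, 5]
decisions = closed_loop(signal)
```

In this toy signal the sketch stays silent until most of the window is filled by the burst. A real device would rely on far richer signal features and clinically tuned parameters.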

The market for neurotechnology is predicted to expand by around 75% by 2026, to US$17.1 billion. But as commercial investment grows, so too do the instances of neurotechnology companies giving up on products or going out of business, abandoning the people who have come to depend on their device.

Shortly after the demise of ATI, a company called Nuvectra, which was based in Plano, Texas, filed for bankruptcy in 2019. Its device — a new kind of spinal-cord stimulator for chronic pain — had been implanted in at least 3,000 people. In 2020, artificial-vision company Second Sight, in Sylmar, California, laid off most of its workforce, ending support for the 350 or so people who were using its much heralded retinal implant to see. And in June, another manufacturer of spinal-cord stimulators — Stimwave in Pompano Beach, Florida — filed for bankruptcy. The firm has been bought by a credit-management company and is now embroiled in a legal battle with its former chief executive. Thousands of people with the stimulator, and their physicians, are watching on in the hope that the company will continue to operate.

When the makers of implanted devices go under, the implants themselves are typically left in place — surgery to remove them is often too expensive or risky, or simply deemed unnecessary. But without ongoing technical support from the manufacturer, it is only a matter of time before the programming needs to be adjusted or a snagged wire or depleted battery renders the implant unusable.

People are then left searching for another way to manage their condition, but with the added difficulty of a non-functional implant that can be an obstacle both to medical imaging and future implants. For some people, including Möllmann-Bohle, no clear alternative exists.

“It’s a systemic problem,” says Jennifer French, executive director of Neurotech Network, a patient advocacy and support organization in St. Petersburg, Florida. “It goes all the way back to clinical trials, and I don’t think it’s received enough attention.”

As money pours into the neurotechnology sector, implant recipients, physicians, biomedical engineers and medical ethicists are all calling for action to protect people with neural implants. “Unfortunately, with that kind of investment, come failures,” says Gabriel Lázaro-Muñoz, an ethicist specializing in neurotechnology at Harvard Medical School in Boston, Massachusetts. “We need to figure out a way to minimize the harms that patients will endure because of these failures.”

Left to their own devices

When Möllmann-Bohle had the ATI-made neurostimulator implanted to help with his cluster headaches, he agreed to participate in a five-year post-approval trial aimed at refining the device. He diligently provided ATI with data from his device and answered questionnaires about his progress. Every few months he made an 800-kilometre round trip to Hamburg to be assessed.

But four years in, the company running the trial on behalf of ATI called Möllmann-Bohle to tell him it was over. Rumours spread that the firm was in trouble, before a letter from May confirmed his fears — ATI had gone out of business.

Timothy White, another recipient of the company’s stimulator who took part in the post-approval trial, also heard of ATI’s closure second-hand.

Now head of clinical affairs for a medical-device company based near Frankfurt, White credits the device with allowing him to complete his medical training. Indeed, ATI had seized on this eloquent medical student’s enthusiasm for its technology and asked him to speak at conferences and to investors.

Yet even White heard about the company’s collapse only when he contacted May with concerns that his remote control might be under-performing.

“That was really rough for me,” says White. “I was asking myself, what’s going to happen if I lose my remote control, if it breaks down, or the battery dies. But no one really had answers.”

When an implant manufacturer disappears, what happens to the people using its devices varies hugely.

In some cases, there will be alternatives available. When Nuvectra folded, for example, users of its spinal-cord stimulator who feared a resurgence of their chronic pain could turn to similar devices offered by more established companies.

Even this best-case scenario puts considerable strain on the people using the implants, many of whom are already vulnerable, says anaesthesiologist Anjum Bux. He estimates that around 70 people received the Nuvectra device at his pain-management clinics in Kentucky.

Replacing obsolete implants of this kind requires surgery that would otherwise have been unnecessary and takes weeks to recover from. And at around US$40,000 for the surgery and replacement device, it’s also costly — although Bux says that in his experience, insurance providers have picked up the tab.

A greater challenge arises when no ready replacement is available. The stimulator made by ATI that Möllmann-Bohle and White have was the first of its kind. When the manufacturer closed its doors, there was no other implant on the market that they could use to manage their cluster headaches.

Left to fend for themselves, White and Möllmann-Bohle each leant on their own professional expertise. White drew on his medical training and found a drug, developed for treating migraines, that suppresses his headaches. But he must take triple the recommended dose, and worries about potential long-term side effects.

Möllmann-Bohle, meanwhile, turned to skills he developed as an electrical engineer. In the past three years, he has repaired a faulty charging port on the hand-held portion of his device and replaced its inbuilt battery several times. This battery was never intended to be accessible to the user, and it turned out to be unusual. Möllmann-Bohle scoured the Internet and eventually found suitable replacements made by a firm in the United States. When he returned for more, however, he learnt that the company had stopped making them. His most recent replacement came from a Chinese company that custom made what he needed.

His tinkering brought him into conflict with his insurers, who initially advised him not to tamper with the device, but eventually agreed to foot the bill for the replacement parts, after he convinced them he was suitably qualified. “They put really big obstacles in my way, or at least they tried to,” Möllmann-Bohle says. But although his repairs have been successful so far, he knows that he does not have the tools or skills to fix everything that could go wrong.

Although maintaining the device has been tough, Möllmann-Bohle cannot see an alternative. “There is still no medication reliable enough to help me live a pain-free life without the device,” he says.

He and White are now placing much of their hope in the potential revival of ATI’s stimulator technology. In late 2020, a company now called Realeve, based in Effingham, Illinois, announced that it had acquired the patents for the device. The new company intends to market an essentially identical successor device in both the United States and Europe. In April 2021, Realeve attained FDA breakthrough status, which is intended to speed up access to medical devices in the United States.

Möllmann-Bohle and White both approached Realeve earlier this year, and corresponded with then-chief executive Jon Snyder directly, asking for assistance with their implants. So far, they have received none. In an e-mail to Nature in July, Snyder said: “Since we do not have FDA or CE mark approval yet, we are unable to market the therapy and provide support. However, we have investigated the options of providing support via compassionate use approvals in various markets.”

Möllmann-Bohle desperately wants this support to materialize. “He [Snyder] assured me that he and his staff are working on providing replacement parts,” he says. There have been changes at Realeve in recent months, with Snyder departing and a consulting firm taking temporary control of the business. But interim chief executive Peter Donato says that the company has now gained approval in Denmark to distribute replacement devices and software to existing users. He hopes that it can begin deliveries in the latter half of 2023, and says that it is also in talks with three other European countries. For Möllmann-Bohle and others in Germany, the wait goes on. “This new start has been in the making for years now,” he says. “I’m hopeful, but I’m also a realist.”

A commitment to care

Examples of makers supporting implanted neurotechnology when profits fail to materialize are few and far between. French can therefore consider herself one of the lucky ones.

As well as being a prominent advocate for neurotechnology, she has been using an implanted device to help her move for more than 20 years — even though the life-changing technology never became the foundation of a viable business.

In 1999, two years after a snowboarding accident left her unable to move her legs, French enrolled in a clinical trial of an electrical implant system designed by Ronald Triolo, a biomedical engineer at Case Western Reserve University in Cleveland, Ohio.

Over seven and a half hours, surgeons placed 16 electrodes in her body, each of which could stimulate a nerve that runs to her leg muscles. These electrodes were connected to an implanted pulse generator, which is wirelessly powered and controlled by an external unit.

Initially, the implant allowed French to stand and move herself between her wheelchair and a bed or a car. Over time, more electrodes and controllers have been added. Now she can stand and step, and pedal a stationary bike. “I use it on a daily basis for exercise, for standing, for function,” she says.

Although the device was not commercially available at the time French joined the trial, Triolo expected it wouldn’t be long before it was — a similar system developed at Case Western for restoring functional hand and arm movement, known as Freehand, had been brought to market by a local start-up in 1997.

But this did not come to pass. Despite the difference it has made to French’s life, the device she uses has never been commercialized. The company that had acquired the rights to the Freehand system shuttered in 2001, and no other company picked up the device. Freehand’s developer, biomedical engineer Hunter Peckham, also at Case Western, attributes the start-up’s failure to impatient investors. “The uptake was not as fast as they would have liked,” he says.

Around 350 people with Freehand devices, as well as French and her fellow participants in Triolo’s lower-body implant trial, could have lost access to the technology that had become an integral part of their lives. But Peckham and Triolo refused to let this happen.

“We understood that if there was something that they were benefiting from, if you took that away that would be another loss for them — when they had had such a devastating loss before,” Peckham says.

Using old and dwindling stocks of components — including items that the university had acquired after the demise of the Freehand manufacturer — and tapping into money from academic grants, the researchers continue to support as many people with these devices as they can.

Over two decades, the Freehand devices have been repaired as they gradually failed, and funding for a succession of further fixed-term clinical trials has allowed Triolo to continue to support French and her fellow research participants. He has even been able to offer them upgrades over time. French’s system has failed four times, leaving her unable to stand and acutely aware of her reliance on the technology. Every time, the Case Western team has provided the surgery and parts required to restore her movement.

French knows her situation is precarious and that it rests on Triolo continuing to attract funding. “I live every day with the fact that this technology might go away,” she says. But she takes heart in what she sees as the researchers’ unwavering commitment to her.

“Our world view,” Triolo says, “is someone is dedicating their body to help advance our research, and we have an obligation to them to maintain their systems for as long as they want to use them.”

Protection from failure

Konstantin Slavin is a neurosurgeon at the University of Illinois College of Medicine in Chicago, who contributed to clinical trials of ATI’s cluster-headache device and implanted the spinal-cord stimulator made by Nuvectra. He thinks that anyone given an implanted device as a part of routine clinical care should be able to count on ongoing support. “You expect them to receive essentially lifelong care from the device manufacturer,” he says.

He is not alone in this view — every device user, physician and engineer Nature interviewed thinks that people need to be better protected from the failure of device makers.

One proposal is that neurotechnology companies should ensure that there is money available to support the people using their devices in the event of the company’s closure. How this would best be achieved is uncertain. Suggestions include the company setting up a partner non-profit organization to manage funds to cover this eventuality; putting aside money in an escrow account; being obliged to take out an insurance policy that would support users; paying into a government-supported safety network; or ensuring the people using the devices are high-priority creditors during bankruptcy proceedings.

Currently, there is little sign that device makers are taking this kind of action. Asked in July if Realeve had plans in place to protect people should its business go the same way as ATI, Snyder, then chief executive, replied: “There is always the risk that a company may stop operating, but our focus is to be successful in our effort to deliver the Realeve Pulsante therapy to patients.”

Realeve’s interim chief executive Donato thinks that it will take legislation to convince investors or shareholders in companies to take on the expense of a safety net. “Unless, and until, the governments force it on us,” he says, “I’m not sure companies will do it on their own.” But Triolo is optimistic that manufacturers might think differently if the jeopardy faced by device users becomes more widely known, and physicians and prospective patients start to favour companies that do have a safety net in place. “If that is what it takes to have a competitive advantage, maybe that’ll be enlightening for our friends on the commercial side of things,” Triolo says.

Indeed, the failures of various neurotechnology start-ups over the past few years are already causing the surgeons responsible for implanting the devices to be cautious.

Robert Levy, a neurosurgeon in Boca Raton, Florida, and a former president of the International Neuromodulation Society, was particularly burnt by the demise of Nuvectra. He had been sufficiently impressed with its technology to become chairman of the company’s medical advisory board in August 2016. But in 2019, around five months before Nuvectra filed for bankruptcy, he cut ties after what he and others formerly associated with the firm saw as the company side-lining the needs of people using the implant in its attempt to stay afloat. “All of us who had any association with the company at that time expressed our severe dissatisfaction with such a move, which we felt was unethical,” Levy says.

From now on, Levy requires any new company that asks him to implant its product to send him a letter guaranteeing support for the people who have the surgery should something happen to the business. “If they should not supply such a letter, they’re not going to be included in my practice,” he says.

He plans to write an editorial arguing for this approach in the journal Neuromodulation, of which he is editor-in-chief, to further raise awareness and put pressure on neurotechnology companies. “Patients are suffering terribly,” he says. “Making them the victims of bad business practices or a bankruptcy is horrible for patients, horrible for the field and grossly unethical.”

Momentum is also building behind another way to protect people with implants: technical standardization. The electrodes, connectors, programmable circuits and power supplies used in implanted neurotechnology are often proprietary or otherwise difficult to source, as Möllmann-Bohle discovered when looking for replacement parts for his stimulator. If components were common across devices, one manufacturer might be able to step in and offer spares when another goes under.

A 2021 survey of surgeons who implant neurostimulators showed that 86% backed standardization of the connectors used by these devices (ref. 2). Such a move would not be without precedent, says retired neurosurgeon and medical-device engineer Richard North, formerly at Johns Hopkins Medical School in Baltimore, and president of the Institute of Neuromodulation in Chicago, who led the survey. Cardiac pacemakers have included standardized elements since the early 1990s, when manufacturers voluntarily agreed to ensure that any company’s power supply could fuel a pacemaker from any other company. Many of those same companies are now the biggest names in spinal-cord stimulators and DBS systems.

North now co-chairs a Connector Standards Committee for the North American Neuromodulation Society, of which the Institute of Neuromodulation is a part, that is promoting the idea. Although the industry has not raced to embrace further standardization, he thinks it is only a matter of time. “It’s inevitable that there will be standardization, and I think the companies involved recognize that too,” he says. As well as making replacement components easier to come by, North thinks that standardization would boost innovation by encouraging companies to develop components that can be used with a wide range of existing systems.

Peckham hopes that the neurotechnology field can go even further — he wants devices to be made open source. Under the auspices of the Institute for Functional Restoration, a non-profit organization that he and his colleagues at Case Western established in 2013, Peckham plans to make the design specifications and supporting documentation of new implantable technologies developed by his team freely available. “Then people can just cut and paste,” he says.

This marks a major departure from the proprietary nature of most current devices. Peckham hopes that other people will build on the technology, and potentially even adapt it for new indications. The benefits for the people using these devices are at the centre of his thinking. “It starts with a commitment to the patients, to the people who can benefit from this,” he says.

It is exactly that sort of commitment that people such as Möllmann-Bohle, White and French want to see, and which they think they are entitled to. A raft of new companies are developing ever more sophisticated neurological implants with the power to transform people’s lives. Should any fail, it is the people using the devices, and their physicians, who will be most affected, says Triolo.

The recent run of commercial casualties demonstrates the human cost of abandoning neurotechnology. “It’s impossible,” Triolo says, “for people not to know that this is becoming a bigger and bigger issue.”

References

- Schoenen, J. et al. Cephalalgia 33, 816–830 (2013).

- North, R. B. et al. Neuromodulation 24, 1299–1306 (2021).

Author: Liam Drew

Design: Chris Ryan

Video: Josh Birt, Colin Kelly, Adam Levy

Original photography: Nyani Quarmyne

Audio: Adam Levy

Multimedia editors: Adam Levy, Dan Fox

Photo editors: Jessica Hallett, Madeline Hutchinson

Translation: Shaya Zarrin

Subeditor: Jenny McCarthy

Project manager: Rebecca Jones

Editor: Richard Hodson

This article was authored by Neil Savage and originally published to Nature

Inspiration can come from anywhere. For Radhika Nagpal, it came from her honeymoon.

Nagpal was snorkelling in the Bahamas when she was approached by a school of colourful striped fish, moving as one. “They come straight at you and check you out and then move off,” says Nagpal, now a mechanical engineer at Princeton University in New Jersey. “I was like, ‘Wow, that is a collective behaviour that I’ve never seen.’”

Part of Nature Outlook: Robotics and artificial intelligence

Her mind returned to those curious fish years later, when she was pondering ways to build swarms of robots that could coordinate their behaviour in challenging environments. The result is a school of robotic fish, called Bluebots, that can coordinate their activity with their fellows (ref. 1).

Nagpal’s school is small, only ten fish with limited abilities. The fish are equipped with blue LEDs so that their comrades can spot them underwater. Simple rules in their programming, such as swimming to the left when they see another Bluebot, enable them to synchronize their movement. But Nagpal hopes to eventually build larger collectives with more complex behaviours.
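A rule of this kind is simple enough to write down directly. The sketch below is only an illustration under stated assumptions: the 15-degree turn, the sensing range and the function names `steer` and `sees` are invented for this example and are not taken from the Bluebots software.

```python
import math

# Assumed turn applied on each step in which a neighbour is visible.
TURN_LEFT = math.radians(15)


def sees(me, other, view_range=2.0):
    """Hypothetical sensing: a neighbour counts as seen if within view_range."""
    return math.dist(me, other) <= view_range


def steer(heading, sees_neighbour):
    """Apply one local rule: turn left if a neighbour is seen, else hold course.

    Headings are in radians, with left taken as counter-clockwise.
    """
    if sees_neighbour:
        return (heading + TURN_LEFT) % (2 * math.pi)
    return heading


# One robot at the origin heading along the x axis, with a neighbour nearby.
new_heading = steer(0.0, sees((0.0, 0.0), (1.0, 1.0)))
```

Each robot consults only what it can see for itself. Any group-level pattern emerges from many robots applying the same rule, with no central controller.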

Such robotic schools could be tasked with locating and recording data on coral reefs to help researchers to study the reefs’ health over time. Just as living fish in a school might engage in different behaviours simultaneously — some mating, some caring for young, others finding food — but suddenly move as one when a predator approaches, robotic fish would have to perform individual tasks while communicating to each other when it’s time to do something different.

“The majority of what my lab really looks at is the coordination techniques — what kinds of algorithms have evolved in nature to make systems work well together?” she says.

Many roboticists are looking to biology for inspiration in robot design, particularly in the area of locomotion. Although big industrial robots in vehicle factories, for instance, remain anchored in place, other robots will be more useful if they can move through the world, performing different tasks and coordinating their behaviour.

Some robots can already move on wheels, but wheeled robots cannot climb stairs and are stymied by rough or shifting terrain, such as sand or gravel. By borrowing movement strategies from nature — walking, crawling, swimming, slithering, flying or leaping — robots could gain new functionality. They might perform search-and-rescue operations after an earthquake, or explore caves that are too small or unstable for people to venture into. They could carry out underwater inspections of ships and bridges. And unmanned aerial vehicles (UAVs) could fly more efficiently and better handle turbulence.

“The basic idea is looking to nature to see how things can potentially be done differently, how we can improve our automated systems,” says Michael Tolley, a mechanical engineer who heads the Bioinspired Robotics and Design Lab at the University of California, San Diego.

See Spot run

Perhaps the most obvious strategy for robotic motion is walking, and legged robots do exist. Spot, a low-slung, four-legged machine that looks like a headless yellow dog, can climb uphill and navigate stairs. Its developer, Boston Dynamics in Waltham, Massachusetts, markets the US$74,500 device for mobile inspection of factories, construction sites and hazardous environments. A similar-looking robot, the Mini Cheetah, has been developed at the Massachusetts Institute of Technology (MIT) in Cambridge. “More than 90% of land animals are quadruped,” says Sangbae Kim, a mechanical engineer at MIT who helped to design the Mini Cheetah. “So a natural place to look at is the quadrupedal world. And the cheetah is a king of that world in terms of the speed.”

The Mini Cheetah can already perform backflips, and it runs as fast as 3.9 metres per second — about one-tenth as fast as an actual cheetah, but speedy for a robot. Now Kim is developing control software that he hopes will allow the robot to move smoothly across varying surfaces. This is challenging because the rules for how best to move a limb vary depending on the friction and hardness of the surface. Currently, moving from grass to concrete, or running up a gravelly hill, can cause the robot to stumble. “It runs really ugly and awkward,” Kim says. “It doesn’t fall, but it’s not efficient.”

Nevertheless, quadruped robots are one of the better options for negotiating difficult terrain, says J. Sean Humbert, a mechanical engineer who directs the Bio-Inspired Perception and Robotics Laboratory at the University of Colorado, Boulder. Last year, his group took part in the US Defense Advanced Research Projects Agency’s Subterranean Challenge, in which robots were tasked with navigating tunnels, caves and urban settings to find particular targets; the team took third place, winning $500,000. “The robots that ended up doing really well across the teams were the legged robots,” Humbert says. But faced with a sandy, uphill, rocky landscape, these robots struggled. “Even our Spot robot tipped over and slid around,” he says.

Feel the strain

One possible solution, Humbert says, is to endow robots with animals’ innate ability to sense and respond to mechanosensory information, such as pressure, strain or vibration. He’s been taking that approach with flying machines by embedding strain sensors in the wings of fixed-wing UAVs, as well as in the arms of quadrotor drones, which rely on spinning blades to fly and hover.

The work grew out of studies of honey bees. When Humbert placed bees in a wind tunnel and hit them with sudden gusts of air, their flight would be momentarily disturbed. After a quick change in the pattern of their wing beats, they would right themselves. Honey bees beat their wings 251 times per second, and the animals could make these corrections in just 15 to 20 beats — about 0.08 seconds. “Our conclusion was that [that] had to be mechanosensory information,” Humbert says. “Vision is just not fast enough to correct the spins that we’re seeing.” If a drone could similarly sense a disturbance and automatically correct for it that rapidly, he says, it would be much less likely to crash or be knocked off course.
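As a rough check on the timing quoted above, the correction window follows from dividing the number of wing beats by the beat rate. The short Python sketch below uses only the figures given in the text (251 beats per second, 15 to 20 beats); the function name `correction_time` is made up for illustration.

```python
WINGBEATS_PER_SECOND = 251  # honey-bee wing-beat rate quoted in the text


def correction_time(beats: int, beat_rate_hz: float = WINGBEATS_PER_SECOND) -> float:
    """Return the time in seconds taken by a given number of wing beats."""
    return beats / beat_rate_hz


# The text says bees recover from a gust in 15 to 20 wing beats.
fast = correction_time(15)
slow = correction_time(20)
print(f"correction window: {fast:.3f} s to {slow:.3f} s")
```

The upper end of the range, about 0.08 seconds, matches the figure quoted in the text; the lower end works out closer to 0.06 seconds.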

Fish also respond to mechanosensory stimuli, using a system of sensory organs known as the lateral line. The structure consists of hundreds of tiny sensors spread along the head, trunk and tail fin, and it enables fish to sense changes in the motion and pressure of water caused by obstacles, such as rocks and other animals. “Fish are sensing all of that and are using that, as well as vision, to position themselves relative to each other,” Nagpal says. No comparable underwater pressure sensor exists, but her team hopes to develop one to improve the Bluebots’ navigation.

In San Diego, Tolley is exploring robots built from polymers or other pliable materials that can more safely interact with humans or squeeze through tight spaces. Squishy, pliable robots could have more flexible motion than hard robots with only a few joints, but getting them to walk on soft legs is a challenge.

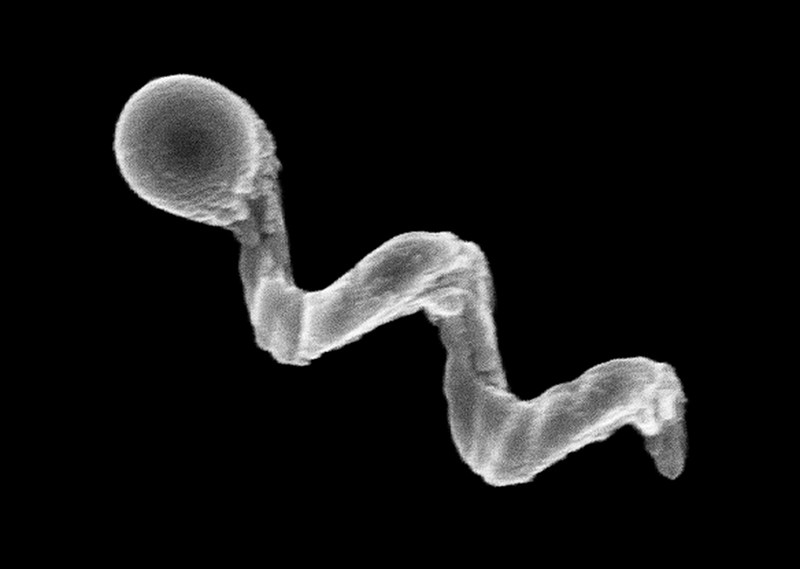

Tolley designed a robot with four soft legs, each divided into three chambers [2]. Pressurized air first enters one chamber, then moves to the next. This movement causes the legs to bend, then relax. By alternately activating opposing pairs of legs, the robot trundles along like a turtle. And because it does not need electronic controls, its design could be useful even in the presence of electromagnetic interference.
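The actuation order described above can be pictured with a toy model. The Python sketch below is purely illustrative and is not the lab's design (the robot itself needs no electronic controls): it assumes the "opposing pairs" are diagonal legs and that each leg's three chambers are pressurized in a fixed order. All names are hypothetical.

```python
from itertools import cycle, islice

CHAMBERS_PER_LEG = 3  # from the text: each leg is divided into three chambers

# Assumption: "opposing pairs" means diagonal legs; the text does not specify.
LEG_PAIRS = (
    ("front-left", "rear-right"),
    ("front-right", "rear-left"),
)


def gait_sequence():
    """Yield (leg_pair, chamber_index) in the order chambers are pressurized."""
    for pair in cycle(LEG_PAIRS):
        # Air enters one chamber, then moves on to the next.
        for chamber in range(CHAMBERS_PER_LEG):
            yield pair, chamber


# Show one full cycle: three chambers for each of the two leg pairs.
for pair, chamber in islice(gait_sequence(), 6):
    print(f"pressurize chamber {chamber} in legs {pair[0]} and {pair[1]}")
```

The point of the sketch is only the ordering: one pair of legs works through its chambers while the other rests, and then the roles swap.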

Hard or soft, one issue robots struggle with is falling over. If a multimillion-dollar robot trips over a rock on Mars, an entire mission could be jeopardized. Some researchers are looking to insects for solutions, particularly click beetles, which can jump up to 20 times their body length without using their legs [3].

Click beetles use a muscle to compress soft tissue, building up energy; a latch system holds the compressed tissue in place. When the animal releases the latch, producing its characteristic clicking sound, the tissue expands rapidly and the beetle is launched into the air, accelerating at about 530 times the force of gravity. (By comparison, a rider on a roller coaster typically experiences about four times the force of gravity.) If a robot could do that, it would have a mechanism for righting itself after tipping over, says Aimy Wissa, a mechanical and aerospace engineer who runs the Bio-inspired Adaptive Morphology Lab at Princeton.
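To put the two figures in the comparison above into everyday units, the multiples of gravity can be converted to metres per second squared. This is a minimal sketch that assumes the conventional standard value for gravitational acceleration; the helper name `g_to_ms2` is invented for the example.

```python
STANDARD_GRAVITY = 9.80665  # m/s^2, conventional standard value (assumed here)


def g_to_ms2(multiple_of_g: float) -> float:
    """Convert an acceleration given as a multiple of g into m/s^2."""
    return multiple_of_g * STANDARD_GRAVITY


beetle = g_to_ms2(530)  # click-beetle launch, figure from the text
coaster = g_to_ms2(4)   # roller-coaster rider, figure from the text

print(f"click beetle:   {beetle:.0f} m/s^2")
print(f"roller coaster: {coaster:.0f} m/s^2")
print(f"ratio:          {beetle / coaster:.1f}x")
```

On these figures the beetle's launch acceleration is roughly 5,200 m/s², more than 130 times what the roller-coaster rider experiences.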

Even more interesting, Wissa says, is that the beetle can perform this manoeuvre four or five times in rapid succession, without suffering any apparent damage. She’s trying to develop models that explain how the energy is rapidly dissipated without harming the insect, which could prove useful in applications involving rapid acceleration and deceleration, such as bulletproof vests. Other creatures also store and release energy to trigger rapid motion, including fruit-fly larvae and Venus flytraps (Dionaea muscipula), and understanding how they do so could lead to more-responsive artificial muscles, Tolley says.

Totally legless

In some places, such as narrow underground passages or on unstable surfaces, legs could require too much space or be too unstable to propel a robot. Howie Choset, a computer scientist at the Robotics Institute of Carnegie Mellon University in Pittsburgh, Pennsylvania, builds snake-like robots with 16 joints that provide a range of motion that could drive everything from surgical instruments wending through the body to reconnaissance robots exploring archaeological sites.

In one early project, Choset took his robo-snakes to the Red Sea, where ancient Egyptians had dug caves to store boats that they’d built for trade with the Land of Punt, thought to be located in modern Somalia. The caves were no longer safe for human explorers, but snake robots seemed well suited to the task — until they didn’t. “The truth is, we got stuck,” Choset says. “We couldn’t go up and down the sandy inclines.”

To work out how a real snake would approach the problem, Choset looked to sidewinders, snakes that move by thrusting their bodies sideways in an S-shaped curve, gliding easily over sand [4]. Because sand is granular, it can behave as either a liquid or a solid, depending on how much force is applied. Choset found that sidewinders can exert the right amount of pushing force so that the sand remains solid underneath them and supports their bodies. “It wasn’t until we started looking at the real snakes, the sidewinders, and how they moved on sandy terrains that we were able to understand how to make our robot work on sandy terrains,” he says.

As for Wissa, she’s trying to build robots that can both swim and fly, using an animal that can do both as inspiration: flying fish [5]. These creatures use their pelvic fins to skim across the water’s surface and then launch into the air, where they can glide up to 400 metres.

Flying fish, Wissa explains, are “actually very good gliders”. But when they drop back to the water, they don’t submerge. “They actually just dip their caudal fin and they flap it vigorously, and then they can take off again,” Wissa says. “You can think of it as a taxiing manoeuvre.” She hopes to learn enough about this behaviour to develop a robot that can move through both air and water using the same propulsion mechanisms. “We’re very good as engineers in designing things for a single function,” Wissa says. “Where nature really can teach us a lot of lessons is this concept of multi-functionality.”

For another type of multi-functional locomotion, Wissa focuses on grasshoppers, which can jump and then open their wings to glide. She hopes to understand what makes them such good gliders when many other insects rely on high-frequency flapping to fly. Perhaps, she says, it has to do with their wing shape.

Wissa also seeks inspiration from birds. She’s used aerodynamic testing and structural modelling to investigate covert feathers — small, stiff feathers that overlap other feathers on a bird’s wings and tail [6]. When a bird tries to land in windy conditions, the covert feathers on the wings deploy, either passively in response to air flow or actively under control of a tendon. The covert feathers alter the shape of the wing and give the bird finer control over its interaction with air flow, and don’t require as much energy as flapping the whole wing. By learning to understand the physics of these feathers, Wissa hopes to improve the flight of a UAV.

A two-way street

Biology has informed robotics, but the engineering involved can also provide insights into animal kinesiology. “We didn’t start by looking at biology,” Choset says. Instead, he mathematically modelled the fundamental principles of the motion he was interested in. “And in doing so, something kind of magical happened — we started coming up with ways to explain how biology works. So, is it robot-inspired biology or biologically inspired robots?”

Other engineers have had similar experiences. Nagpal is collaborating with ichthyologist George Lauder at Harvard University in Cambridge to model the hydrodynamics of schooling, to see whether the formation provides living fish with an energy benefit. And designs that make drones fly in a more energy-efficient way might help to explain how birds and insects have evolved to do something similar. Wissa hopes her work, in addition to building flying, swimming robots, will lead to a greater understanding of flying fish. “We’re using this model to actually test hypotheses about nature, about why some species of flying fish have enlarged pelvic fins while others don’t,” Wissa says.

But despite the links between biology and engineering, don’t expect bio-inspired robots to ultimately look like the creatures that influenced them. Wissa says that, although many first attempts at mimicking biology resemble the original biological forms, scientists’ ultimate aim is to understand the principles behind how the systems operate, and then adapt those to different structures and materials. “We’re just copying the physics and the rules for how things work,” she says, “and then making engineering systems that serve the same function.”

doi: https://doi.org/10.1038/d41586-022-03014-x

This article is part of Nature Outlook: Robotics and artificial intelligence, an editorially independent supplement produced with the financial support of third parties.

References

1. Berlinger, F., Gauci, M. & Nagpal, R. Sci. Robot. 6, eabd8668 (2021).

2. Drotman, D., Jadhav, S., Sharp, D., Chan, C. & Tolley, M. T. Sci. Robot. 6, eaay2627 (2021).

3. Bolmin, O. et al. Proc. Natl Acad. Sci. USA 118, e2014569118 (2021).

4. Gong, C., Hatton, R. L. & Choset, H. In 2012 IEEE International Conference on Robotics and Automation 4222–4227 (2012).

5. Saro-Cortes, V. et al. Integr. Comp. Biol. https://doi.org/10.1093/icb/icac101 (2022).

6. Duan, C. & Wissa, A. Bioinspir. Biomim. 16, 046020 (2021).

This article was authored by Anthony King and originally published to Nature

Cancer drugs usually take a scattergun approach. Chemotherapies inevitably hit healthy bystander cells while blasting tumours, sparking a slew of side effects. It is also a big ask for an anticancer drug to find and destroy an entire tumour — some are difficult to reach, or hard to penetrate once located.

A long-dreamed-of alternative is to inject a battalion of tiny robots into a person with cancer. These miniature machines could navigate directly to a tumour and smartly deploy a therapeutic payload right where it is needed. “It is very difficult for drugs to penetrate through biological barriers, such as the blood–brain barrier or mucus of the gut, but a microrobot can do that,” says Wei Gao, a medical engineer at the California Institute of Technology in Pasadena.

Among his inspirations is the 1966 film Fantastic Voyage, in which a miniaturized submarine goes on a mission to remove a blood clot in a scientist’s brain, piloted through the bloodstream by a similarly shrunken crew. Although most of the film remains firmly in the realm of science fiction, progress on miniature medical machines in the past ten years has seen experiments move into animals for the first time.

There are now numerous micrometre- and nanometre-scale robots that can propel themselves through biological media, such as the matrix between cells and the contents of the gastrointestinal tract. Some are moved and steered by outside forces, such as magnetic fields and ultrasound. Others are driven by onboard chemical engines, and some are even built on top of bacteria and human cells to take advantage of those cells’ inbuilt ability to get around. Whatever the source of propulsion, it is hoped that these tiny robots will be able to deliver therapies to places that a drug alone might not be able to reach, such as into the centre of solid tumours. However, even as those working on medical nano- and microrobots begin to collaborate more closely with clinicians, it is clear that the technology still has a long way to go on its fantastic journey towards the clinic.

Poetry in motion

One of the key challenges for a robot operating inside the human body is getting around. In Fantastic Voyage, the crew uses blood vessels to move through the body. However, it is here that reality must immediately diverge from fiction. “I love the movie,” says roboticist Bradley Nelson, gesturing to a copy of it in his office at the Swiss Federal Institute of Technology (ETH) Zurich in Switzerland. “But the physics are terrible.” Tiny robots would have severe difficulty swimming against the flow of blood, he says. Instead, they will initially be administered locally, then move towards their targets over short distances.