This article was authored by Anthony King and originally published to Nature

Cancer drugs usually take a scattergun approach. Chemotherapies inevitably hit healthy bystander cells while blasting tumours, sparking a slew of side effects. It is also a big ask for an anticancer drug to find and destroy an entire tumour — some are difficult to reach, or hard to penetrate once located.

A long-dreamed-of alternative is to inject a battalion of tiny robots into a person with cancer. These miniature machines could navigate directly to a tumour and smartly deploy a therapeutic payload right where it is needed. “It is very difficult for drugs to penetrate through biological barriers, such as the blood–brain barrier or mucus of the gut, but a microrobot can do that,” says Wei Gao, a medical engineer at the California Institute of Technology in Pasadena.

Part of Nature Outlook: Robotics and artificial intelligence

Among his inspirations is the 1966 film Fantastic Voyage, in which a miniaturized submarine goes on a mission to remove a blood clot in a scientist’s brain, piloted through the bloodstream by a similarly shrunken crew. Although most of the film remains firmly in the realm of science fiction, progress on miniature medical machines in the past ten years has seen experiments move into animals for the first time.

There are now numerous micrometre- and nanometre-scale robots that can propel themselves through biological media, such as the matrix between cells and the contents of the gastrointestinal tract. Some are moved and steered by outside forces, such as magnetic fields and ultrasound. Others are driven by onboard chemical engines, and some are even built on top of bacteria and human cells to take advantage of those cells’ inbuilt ability to get around. Whatever the source of propulsion, it is hoped that these tiny robots will be able to deliver therapies to places that a drug alone might not be able to reach, such as into the centre of solid tumours. However, even as those working on medical nano- and microrobots begin to collaborate more closely with clinicians, it is clear that the technology still has a long way to go on its fantastic journey towards the clinic.

Poetry in motion

One of the key challenges for a robot operating inside the human body is getting around. In Fantastic Voyage, the crew uses blood vessels to move through the body. However, it is here that reality must immediately diverge from fiction. “I love the movie,” says roboticist Bradley Nelson, gesturing to a copy of it in his office at the Swiss Federal Institute of Technology (ETH) Zurich in Switzerland. “But the physics are terrible.” Tiny robots would have severe difficulty swimming against the flow of blood, he says. Instead, they will initially be administered locally, then move towards their targets over short distances.

When it comes to design, size matters. “Propulsion through biological media becomes a lot easier as you get smaller, as below a micron bots slip between the network of macromolecules,” says Peer Fischer, a robotics researcher at the Max Planck Institute for Intelligent Systems in Stuttgart, Germany. Bots are therefore typically no more than 1–2 micrometres across. However, most do not fall below 300 nanometres. Below that size, it becomes more challenging to detect and track them in biological media, as well as more difficult to generate sufficient force to move them.

Scientists have several choices for how to get their bots moving. Some opt to provide power externally. For instance, in 2009, Fischer — who was working at Harvard University in Cambridge, Massachusetts, at the time, alongside fellow nanoroboticist Ambarish Ghosh — devised a glass propeller, just 1–2 micrometres in length, that could be rotated by a magnetic field1. This allowed the structure to move through water, and by adjusting the magnetic field, it could be steered with micrometre precision. In a 2018 study2, Fischer launched a swarm of micropropellers into a pig’s eye in vitro, and had them travel over centimetre distances through the gel-like vitreous humour into the retina — a rare demonstration of propulsion through real tissue. The swarm was able to slip through the network of biopolymers within the vitreous humour thanks in part to a silicone oil and fluorocarbon coating applied to each propeller. Inspired by the slippery surface that the carnivorous pitcher plant Nepenthes uses to catch insects, this minimized interactions between the micropropellers and biopolymers.

Another way to provide propulsion from outside the body is to use ultrasound. One group placed magnetic cores inside the membranes of red blood cells3, which also carried photoreactive compounds and oxygen. The cells’ distinctive biconcave shape and greater density than other blood components allowed them to be propelled using ultrasonic energy, with an external magnetic field acting on the metallic core to provide steering. Once the bots are in position, light can excite the photosensitive compound, which transfers energy to the oxygen and generates reactive oxygen species to damage cancer cells.

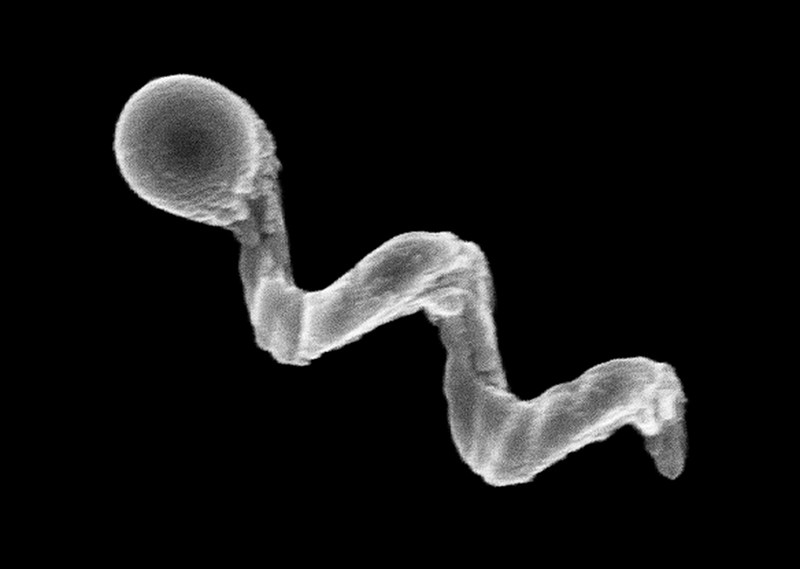

This hijacking of cells is proving to have therapeutic merits in other research projects. Some of the most promising strategies aimed at treating solid tumours involve human cells and other single-celled organisms jazzed up with synthetic parts. In Germany, a group led by Oliver Schmidt, a nanoscientist at Chemnitz University of Technology, has designed a biohybrid robot based on sperm cells4. These are some of the fastest motile cells, capable of hitting speeds of 5 millimetres per minute, Schmidt says. The hope is that these powerful swimmers can be harnessed to deliver drugs to tumours in the female reproductive tract, guided by magnetic fields. Already, it has been shown that they can be magnetically guided to a model tumour in a dish.

Credit: Leibniz IFW, Dresden

“We could load anticancer drugs efficiently into the head of the sperm, into the DNA,” says Schmidt. “Then the sperm can fuse with other cells when it pushes against them.” At the Chinese University of Hong Kong, meanwhile, nanoroboticist Li Zhang led the creation of microswimmers from Spirulina microalgae cloaked in the mineral magnetite. The team then tracked a swarm of them inside rodent stomachs using magnetic resonance imaging5. The biohybrids were shown to selectively target cancer cells. They also gradually degrade, reducing unwanted toxicity.

Another way to get micro- and nanobots moving is to fit them with a chemical engine: a catalyst drives a chemical reaction, creating a gradient on one side of the machine to generate propulsion. Samuel Sánchez, a chemist at the Institute for Bioengineering of Catalonia in Barcelona, Spain, is developing nanomotors driven by chemical reactions for use in treating bladder cancer. Some early devices relied on hydrogen peroxide as a fuel. Its breakdown, promoted by platinum, generated water and oxygen gas bubbles for propulsion. But hydrogen peroxide is toxic to cells even in minuscule amounts, so Sánchez has transitioned towards safer materials. His latest nanomotors are made up of honeycombed silica nanoparticles, tiny gold particles and the enzyme urease6. These 300–400-nm bots are driven forwards by the chemical breakdown of urea in the bladder into carbon dioxide and ammonia, and have been tested in the bladders of mice. “We can now move them and see them inside a living system,” says Sánchez.
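For reference, the two fuel chemistries mentioned above correspond to the following overall reactions. These are standard textbook stoichiometries, written out here as an aid to the reader rather than taken from the cited studies: platinum-catalysed decomposition of hydrogen peroxide, and urease-catalysed hydrolysis of urea.

```latex
\[
2\,\mathrm{H_2O_2} \xrightarrow{\ \mathrm{Pt}\ } 2\,\mathrm{H_2O} + \mathrm{O_2}
\]
\[
\mathrm{(NH_2)_2CO} + \mathrm{H_2O} \xrightarrow{\ \text{urease}\ } \mathrm{CO_2} + 2\,\mathrm{NH_3}
\]
```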

Breaking through

A standard treatment for bladder cancer is surgery, followed by immunotherapy in the form of an infusion of a weakened strain of Mycobacterium bovis bacteria into the bladder, to prevent recurrence. The bacterium activates the person’s immune system, and is also the basis of the BCG vaccine for tuberculosis. “The clinicians tell us that this is one of the few things that has not changed over the past 60 years,” says Sánchez. There is a need to improve on BCG in oncology, according to his collaborator, urologic oncologist Antoni Vilaseca at the Hospital Clinic of Barcelona. Current treatments reduce recurrences and progression, “but we have not improved survival”, Vilaseca says. “Our patients are still dying.”

The nanobot approach that Sánchez is trying promises precision delivery. He plans to insert his bots into the bladder (or intravenously), to motor towards the cancer with their cargo of therapeutic agents to target cancer cells, using abundant urea as a fuel. He might use a magnetic field for guidance, if needed, but a more straightforward replacement of BCG with bots that do not require external control, perhaps using an antibody to bind a tumour marker, would please clinicians most. “If we can deliver our treatment to the tumour cells only, then we can reduce side effects and increase activity,” says Vilaseca.

Not all cancers can be reached by swimming through liquid, however. Natural physiological barriers can block efficient drug delivery. The gut wall, for example, allows absorption of nutrients into the bloodstream, and offers an avenue for getting therapies into bodies. “The gastrointestinal tract is the gateway to our body,” says Joseph Wang, a nanoengineer at the University of California, San Diego. However, a combination of cells, microbes and mucus stops many particles from accessing the rest of the body. To deliver some therapies, simply being in the intestine isn’t enough — they also need to be able to burrow through its defences to reach the bloodstream, and a nanomachine could help with this.

In 2015, Wang and his colleagues, including Gao, reported the first self-propelled robot in vivo, inside a mouse stomach7. Their zinc-based nanomotor dissolved in the harsh stomach acids, producing hydrogen bubbles that rocketed the robot forwards. In the lower gastrointestinal tract, they instead use magnesium. “Magnesium reacts with water to give a hydrogen bubble,” says Wang. In either case, the metal micromotors are encapsulated in a coating that dissolves at the right location, freeing the micromotor to propel the bot into the mucous wall.
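The bubble-generating steps described here can be written as simple overall reactions. This is general chemistry, given as a sketch and not quoted from the cited paper: zinc dissolving in acid, and magnesium reacting with water.

```latex
\[
\mathrm{Zn} + 2\,\mathrm{H^+} \longrightarrow \mathrm{Zn^{2+}} + \mathrm{H_2}
\]
\[
\mathrm{Mg} + 2\,\mathrm{H_2O} \longrightarrow \mathrm{Mg(OH)_2} + \mathrm{H_2}
\]
```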

Some bacteria have already worked out their own ways to sneak through the gut wall. Helicobacter pylori, which causes inflammation in the stomach, excretes urease enzymes to generate ammonia and liquefy the thick mucus that lines the stomach wall. Fischer envisages future micro- and nanorobots borrowing this approach to deliver drugs through the gut.

Solid tumours are another difficult place to deliver a drug. As these malignancies develop, a ravenous hunger for oxygen promotes an outside surface covered with blood vessels, while an oxygen-deprived core builds up within. Low oxygen levels force cells deep inside to switch to anaerobic metabolism and churn out lactic acid, creating acidic conditions. As the oxygen gradient builds, the tumour becomes increasingly difficult to penetrate. Nanoparticle drugs lack a force with which to muscle through a tumour’s fortifications, and typically less than 2% of them will make it inside8. Proponents of nanomachines think that they can do better.

Sylvain Martel, a nanoroboticist at Montreal Polytechnic in Canada, is trying to break into solid tumours using bacteria that naturally contain a chain of magnetic iron-oxide nanocrystals. In nature, these Magnetococcus species seek regions that have low oxygen. Martel has engineered such a bacterium to target active cancer cells deep inside tumours8. “We guide them with a magnetic field towards the tumour,” explains Martel, taking advantage of the magnetic crystals that the bacteria typically use like a compass for orientation. The precise locations of low-oxygen regions are uncertain even with imaging, but once these bacteria reach the right location, their autonomous capability kicks in and they motor towards low-oxygen regions. In a mouse, more than half the bacteria injected close to tumour grafts broke into this tumour region, each laden with dozens of drug-loaded liposomes. Martel cautions, however, that there is still some way to go before the technology is proven safe and effective for treating people with cancer.

In the Netherlands, chemist Daniela Wilson at Radboud University in Nijmegen and colleagues have developed enzyme-driven nanomotors powered by DNA that might similarly be able to autonomously home in on tumour cells9. The motors navigate towards areas that are richer in DNA, such as tumour cells that are undergoing apoptosis. “We want to create systems that are able to sense gradients by different endogenous fuels in the body,” Wilson says, suggesting that the higher levels of lactic acid or glucose typically found in tumours could also be used for targeting. Once in place, the autonomous bots seem to be picked up by cells more easily than passive particles are, perhaps because the bots push against cells.

Fiction versus reality

Inspirational though Fantastic Voyage might have been for many working in the field of medical nanorobotics, there are some who think the film has become a burden. “People think of this as science fiction, which excites people, but on the other hand they don’t take it so seriously,” says Martel. Fischer is similarly jaded by movie-inspired hype. “People sometimes write very liberally as if nanobots for cancer treatment are almost here,” he says. “But this is not even in clinical trials right now.”

Nonetheless, advances in the past ten years have raised expectations of what is possible with current technology. “There’s nothing more fun than building a machine and watching it move. It’s a blast,” says Nelson. But having something wiggling under a microscope no longer has the same draw, without medical context. “You start thinking, ‘how could this benefit society?’” he says.

With this in mind, many researchers creating nanorobots for medical purposes are working more closely with clinicians than ever before. “You find a lot of young doctors who are really interested in what the new technologies can do,” Nelson says. Neurologist Philipp Gruber, who works with stroke patients at Aarau Cantonal Hospital in Switzerland, began a collaboration with Nelson two years ago after contacting ETH Zurich. The pair share an ambition to use steerable microbots to dissolve clots in people’s brains after ischaemic stroke — either mechanically, or by delivering a drug. “Brad knows everything about engineering,” says Gruber, “but we can advise about the problems we face in the clinic and the limitations of current treatment options.”

Sánchez tells a similar story: while he began talking to physicians around a decade ago, their interest has warmed considerably since his experiments in animals began three to four years ago. “We are still in the lab, but at least we are working with human cells and human organoids, which is a step forward,” says his collaborator Vilaseca.

As these seedlings of clinical collaborations take root, it is likely that oncology applications will be the earliest movers — particularly those that resemble current treatments, such as infusing microbots instead of BCG into cancerous bladders. But even these therapeutic uses are probably at least 7–10 years away. In the nearer term, there might be simpler tasks that nanobots can be used to accomplish, according to those who follow the field closely.

For example, Martin Pumera, a nanoroboticist at the University of Chemistry and Technology in Prague, is interested in improving dental care by landing nanobots beneath titanium tooth implants10. The tiny gap between the metal implants and gum tissue is an ideal niche for bacterial biofilms to form, triggering infection and inflammation. When this happens, the implant must often be removed, the area cleaned, and a new implant installed — an expensive and painful procedure. He is collaborating with dental surgeon Karel Klíma at Charles University in Prague.

Another problem the two are tackling is oral bacteria gaining access to tissue during surgery of the jaws and face. “A biofilm can establish very quickly, and that can mean removing titanium plates and screws after surgery, even before a fracture heals,” says Klíma. A titanium oxide robot could be administered to implants using a syringe, then activated chemically or with light to generate active oxygen species to kill the bacteria. Examples a few micrometres in length have so far been constructed, but much smaller bots — only a few hundred nanometres in length — are the ultimate aim.

Clearly, this is a long way from parachuting bots into hard-to-reach tumours deep inside a person. But the rising tide of in vivo experiments and the increasing involvement of clinicians suggests that microrobots might just be leaving port on their long journey towards the clinic.

doi: https://doi.org/10.1038/d41586-022-00859-0

This article is part of Nature Outlook: Robotics and artificial intelligence, an editorially independent supplement produced with the financial support of third parties.

References

1. Ghosh, A. & Fischer, P. Nano Lett. 9, 2243–2245 (2009).

2. Wu, Z. et al. Sci. Adv. 4, eaat4388 (2018).

3. Gao, C. et al. ACS Appl. Mater. Interfaces 11, 23392–23400 (2019).

4. Xu, H. et al. ACS Nano 12, 327–337 (2018).

5. Yan, X. et al. Sci. Robot. 2, eaaq1155 (2017).

6. Hortelao, A. C. et al. Sci. Robot. 6, eabd2823 (2021).

7. Gao, W. et al. ACS Nano 9, 117–123 (2015).

8. Felfoul, O. et al. Nature Nanotechnol. 11, 941–947 (2016).

9. Ye, Y. et al. Nano Lett. 21, 8086–8094 (2021).

10. Villa, K. et al. Cell Rep. Phys. Sci. 1, 100181 (2020).

This article was authored by Marcus Woo and originally published to Nature

Fork in hand, a robot arm skewers a strawberry from above and delivers it to Tyler Schrenk’s mouth. Sitting in his wheelchair, Schrenk nudges his neck forward to take a bite. Next, the arm goes for a slice of banana, then a carrot. Each motion it performs by itself, on Schrenk’s spoken command.

For Schrenk, who became paralysed from the neck down after a diving accident in 2012, such a device would make a huge difference in his daily life if it were in his home. “Getting used to someone else feeding me was one of the strangest things I had to transition to,” he says. “It would definitely help with my well-being and my mental health.”

His home is already fitted with voice-activated power switches and door openers, enabling him to be independent for about 10 hours a day without a caregiver. “I’ve been able to figure most of this out,” he says. “But feeding on my own is not something I can do.” Which is why he wanted to test the feeding robot, dubbed ADA (short for assistive dexterous arm). Cameras located above the fork enable ADA to see what to pick up. But knowing how forcefully to stick a fork into a soft banana or a crunchy carrot, and how tightly to grip the utensil, requires a sense that humans take for granted: “Touch is key,” says Tapomayukh Bhattacharjee, a roboticist at Cornell University in Ithaca, New York, who led the design of ADA while at the University of Washington in Seattle. The robot’s two fingers are equipped with sensors that measure the sideways (or shear) force when holding the fork1. The system is just one example of a growing effort to endow robots with a sense of touch.

“The really important things involve manipulation, involve the robot reaching out and changing something about the world,” says Ted Adelson, a computer-vision specialist at the Massachusetts Institute of Technology (MIT) in Cambridge. Only with tactile feedback can a robot adjust its grip to handle objects of different sizes, shapes and textures. With touch, robots can help people with limited mobility, pick up soft objects such as fruit, handle hazardous materials and even assist in surgery. Tactile sensing also has the potential to improve prosthetics, help people to literally stay in touch from afar, and even has a part to play in fulfilling the fantasy of the all-purpose household robot that will take care of the laundry and dishes. “If we want robots in our home to help us out, then we’d want them to be able to use their hands,” Adelson says. “And if you’re using your hands, you really need a sense of touch.”

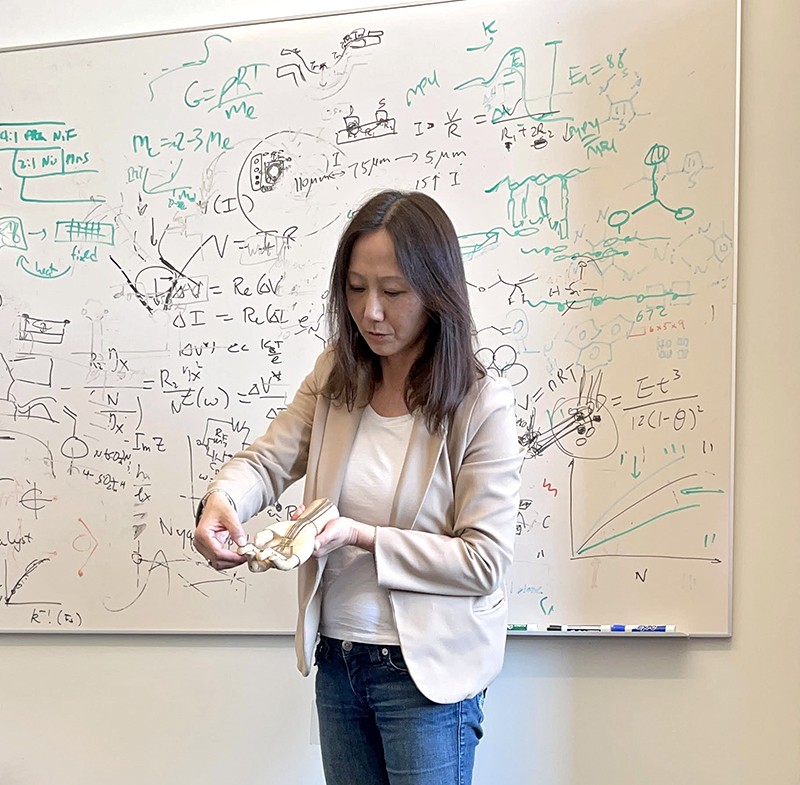

With this goal in mind, and buoyed by advances in machine learning, researchers around the world are developing myriad tactile sensors, from finger-shaped devices to electronic skins. The idea isn’t new, says Veronica Santos, a roboticist at the University of California, Los Angeles. But advances in hardware, computational power and algorithmic knowhow have energized the field. “There is a new sense of excitement about tactile sensing and how to integrate it with robots,” Santos says.

Feel by sight

One of the most promising sensors relies on well-established technology: cameras. Today’s cameras are inexpensive yet powerful, and combined with sophisticated computer vision algorithms, they’ve led to a variety of tactile sensors. Different designs use slightly different techniques, but they all interpret touch by visually capturing how a material deforms on contact.

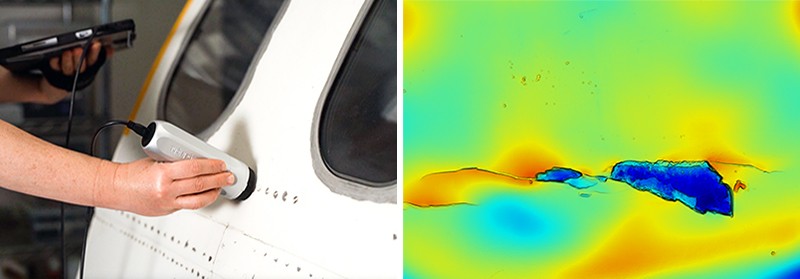

ADA uses a popular camera-based sensor called GelSight, the first prototype of which was designed by Adelson and his team more than a decade ago2. A light and a camera sit behind a piece of soft rubbery material, which deforms when something presses against it. The camera then captures the deformation with super-human sensitivity, discerning bumps as small as one micrometre. GelSight can also estimate forces, including shear forces, by tracking the motion of a pattern of dots printed on the rubbery material as it deforms2.
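As a rough illustration of the dot-tracking idea (not GelSight's actual algorithm), the sketch below averages how far printed markers shift between a reference frame and a contact frame, then scales that shift by a calibration constant. The function names, the pixel coordinates and the linear `stiffness_n_per_px` constant are all hypothetical; a real sensor would need proper calibration or a learned model.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def mean_displacement(before: List[Point], after: List[Point]) -> Point:
    """Average (dx, dy) shift of matched marker dots, in pixels."""
    if not before or len(before) != len(after):
        raise ValueError("need two non-empty, equally sized lists of matched dots")
    n = len(before)
    dx = sum(a[0] - b[0] for b, a in zip(before, after)) / n
    dy = sum(a[1] - b[1] for b, a in zip(before, after)) / n
    return dx, dy


def estimate_shear(before: List[Point], after: List[Point],
                   stiffness_n_per_px: float = 0.05) -> Point:
    """Convert the mean marker shift into a shear-force estimate in newtons.

    Assumption: force grows linearly with marker shift. The constant is a
    made-up calibration value used only for illustration.
    """
    dx, dy = mean_displacement(before, after)
    return stiffness_n_per_px * dx, stiffness_n_per_px * dy


# Hypothetical marker positions (pixels) before and during contact.
reference = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0)]
pressed = [(2.0, 1.0), (12.0, 1.0), (2.0, 11.0)]

fx, fy = estimate_shear(reference, pressed)
print(f"estimated shear: ({fx:.2f}, {fy:.2f}) N")
```

Averaging over all dots gives a single net shear vector; keeping the per-dot shifts instead would give a map of how the force is distributed across the contact patch.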

GelSight is not the first or the only camera-based sensor (ADA was tested with another one, called FingerVision). However, its relatively simple and easy-to-manufacture design has so far set it apart, says Roberto Calandra, a research scientist at Meta AI (formerly Facebook AI) in Menlo Park, California, who has collaborated with Adelson. In 2011, Adelson co-founded a company, also called GelSight, based on the technology he had developed. The firm, which is based in Waltham, Massachusetts, has focused its efforts on industries such as aerospace, using the sensor technology to inspect for cracks and defects on surfaces.

One of the latest camera-based sensors is called Insight, documented this year by Huanbo Sun, Katherine Kuchenbecker and Georg Martius at the Max Planck Institute for Intelligent Systems in Stuttgart, Germany3. The finger-like device consists of a soft, opaque, tent-like dome held up with thin struts, hiding a camera inside.

It’s not as sensitive as GelSight, but it offers other advantages. GelSight is limited to sensing contact on a small, flat patch, whereas Insight detects touch all around its finger in 3D, Kuchenbecker says. Insight’s silicone surface is also easier to fabricate, and it determines forces more precisely. Kuchenbecker says that Insight’s bumpy interior surface makes forces easier to see, and unlike GelSight’s method of first determining the geometry of the deformed rubber surface and then calculating the forces involved, Insight determines forces directly from how light hits its camera. Kuchenbecker thinks this makes Insight a better option for a robot that needs to grab and manipulate objects; Insight was designed to form the tips of a three-digit robot gripper called TriFinger.

Skin solutions

Camera-based sensors are not perfect. For example, they cannot sense invisible forces, such as the magnitude of tension of a taut rope or wire. A camera’s frame-rate might also not be quick enough to capture fleeting sensations, such as a slipping grip, Santos says. And squeezing a relatively bulky camera-based sensor into a robot finger or hand, which might already be crowded with other sensors or actuators (the components that allow the hand to move), can also pose a challenge.

This is one reason other researchers are designing flat and flexible devices that can wrap around a robot appendage. Zhenan Bao, a chemical engineer at Stanford University in California, is designing skins that incorporate flexible electronics and replicate the body’s ability to sense touch. In 2018, for example, her group created a skin that detects the direction of shear forces by mimicking the bumpy structure of a below-surface layer of human skin called the spinosum4.

When a gentle touch presses the outer layer of human skin against the dome-like bumps of the spinosum, receptors in the bumps feel the pressure. A firmer touch activates deeper-lying receptors found below the bumps, distinguishing a hard touch from a soft one. And a sideways force is felt as pressure pushing on the side of the bumps.

Bao’s electronic skin similarly features a bumpy structure that senses the intensity and direction of forces. Each one-millimetre bump is covered with 25 capacitors, which store electrical energy and act as individual sensors. When the layers are pressed together, the amount of stored energy changes. Because the sensors are so small, Bao says, a patch of electronic skin can pack in a lot of them, enabling the skin to sense forces accurately and aiding a robot to perform complex manipulations of an object.
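One way to see why pressing the layers together changes the stored energy is the idealized parallel-plate model below. It is a textbook simplification and an assumption on our part, since the real sensor geometry is bumpy rather than flat. Here $A$ is the electrode area, $d$ the gap between layers, $\varepsilon_r$ the relative permittivity of the material in the gap, and $V$ the applied voltage.

```latex
\[
C = \frac{\varepsilon_0 \varepsilon_r A}{d},
\qquad
U = \tfrac{1}{2}\, C V^2
\]
```

In this model, squeezing the layers reduces $d$, which raises the capacitance $C$ and, at a fixed voltage, the stored energy $U$.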

To test the skin, the researchers attached a patch to the fingertip of a rubber glove worn by a robot hand. The hand could pat the top of a raspberry and pick up a ping-pong ball without crushing either.

Although other electronic skins might not be as sensor-dense, they tend to be easier to fabricate. In 2020, Benjamin Tee, a former student of Bao who now leads his own laboratory at the National University of Singapore, developed a sponge-like polymer that can sense shear forces5. Moreover, similar to human skin, it is self-healing: after being torn or cut, it fuses back together when heated and stays stretchy, which is useful for dealing with wear and tear.

The material, dubbed AiFoam, is embedded with flexible copper wire electrodes, roughly emulating how nerves are distributed in human skin. When touched, the foam deforms and the electrodes squeeze together, which changes the electrical current travelling through it. This allows both the strength and direction of forces to be measured. AiFoam can even sense a person’s presence just before they make contact — when their finger comes within a few centimetres, it lowers the electric field between the foam’s electrodes.

Last November, researchers at Meta AI and Carnegie Mellon University in Pittsburgh, Pennsylvania, announced a touch-sensitive skin comprising a rubbery material embedded with magnetic particles6. When the skin, dubbed ReSkin, deforms, the particles move along with it, changing the magnetic field. It is designed to be easily replaced (it can be peeled off and a fresh skin installed without requiring complex recalibration), and 100 sensors can be produced for less than US$6.

Rather than being universal tools, different skins and sensors will probably lend themselves to particular purposes. Bhattacharjee and his colleagues, for example, have created a stretchable sleeve that fits over a robot arm and is useful for sensing incidental contact between a robotic arm and its environment7. The sheet is made from layered fabric that detects changes in electrical resistance when pressure is applied to it. It can’t detect shear forces, but it can cover a broad area and wrap around a robot’s joints.

Bhattacharjee is using the sleeve to identify not just when a robotic arm comes into contact with something as it moves through a cluttered environment, but also what it bumps up against. If a helper robot in a home brushed against a curtain while reaching for an object, it might be fine for it to continue, but contact with a fragile wine glass would require evasive action.

Other approaches use air to provide a sense of touch. Some robots use suction grippers to pick up and move objects in warehouses or in the oceans. In these cases, Hannah Stuart, a mechanical engineer at the University of California, Berkeley, is hoping that measuring suction airflow can provide tactile feedback to a robot. Her group has shown that the rate of airflow can determine the strength of the suction gripper’s hold and even the roughness of the surface it is suckered on to8. And underwater, it can reveal how an object moves while being held by a suction-aided robot hand9.

Processing feelings

Today’s tactile technologies are diverse, Kuchenbecker says. “There are multiple feasible options, and people can build on the work of others,” she says. But designing and building sensors is only the start. Researchers then have to integrate them into a robot, which must then work out how to use a sensor’s information to execute a task. “That’s actually going to be the hardest part,” Adelson says.

For electronic skins that contain a multitude of sensors, processing and analysing data from them all would be computationally and energy intensive. To handle so many data, researchers such as Bao are taking inspiration from the human nervous system, which processes a constant flood of signals with ease. Computer scientists have been trying to mimic the nervous system with neuromorphic computers for more than 30 years. But Bao’s goal is to combine a neuromorphic approach with a flexible skin that could integrate with the body seamlessly — for example, on a bionic arm.

Unlike in other tactile sensors, Bao’s skins deliver sensory signals as electrical pulses, like those in biological nerves. Information is stored not in the intensity of the pulses, which can wane as a signal travels, but instead in their frequency. As a result, the signal won’t lose much information as the transmission distance increases, she explains.
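The benefit of carrying information in pulse frequency rather than pulse height can be shown with a toy model. This is a minimal sketch of generic rate coding, not Bao's circuit; the function names, the 100 Hz maximum rate and the detection threshold are hypothetical choices for illustration.

```python
from typing import List, Tuple

Pulse = Tuple[float, float]  # (time in seconds, amplitude)


def encode(pressure: float, max_rate_hz: float = 100.0,
           duration_s: float = 1.0) -> List[Pulse]:
    """Turn a normalized pressure (0 to 1) into evenly spaced unit pulses."""
    level = min(max(pressure, 0.0), 1.0)
    n = round(level * max_rate_hz * duration_s)
    if n == 0:
        return []
    return [(i * duration_s / n, 1.0) for i in range(n)]


def transmit(pulses: List[Pulse], gain: float) -> List[Pulse]:
    """Model signal loss along a wire by scaling every pulse amplitude."""
    return [(t, a * gain) for t, a in pulses]


def decode(pulses: List[Pulse], max_rate_hz: float = 100.0,
           duration_s: float = 1.0, threshold: float = 0.1) -> float:
    """Recover pressure by counting detectable pulses, ignoring their height."""
    count = sum(1 for _, a in pulses if a >= threshold)
    return count / (max_rate_hz * duration_s)


sent = encode(0.4)
weak = transmit(sent, gain=0.3)  # amplitudes drop to 30% of their original height
print(decode(sent), decode(weak))
```

Both decoded values match because the pulse count survives attenuation. In this toy model the reading would only be lost if the pulses fell below the detection threshold altogether.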

Pulses from multiple sensors would meet at devices called synaptic transistors, which combine the signals into a pattern of pulses — similar to what happens when nerves meet at synaptic junctions. Then, instead of processing signals from every sensor, a machine-learning algorithm needs only to analyse the signals from several synaptic junctions, learning whether those patterns correspond to, say, the fuzz of a sweater or the grip of a ball.

In 2018, Bao’s lab built this capability into a simple, flexible, artificial nerve system that could identify Braille characters10. When attached to a cockroach’s leg, the device could stimulate the insect’s nerves — demonstrating the potential for a prosthetic device that could integrate with a living creature’s nervous system.

Ultimately, to make sense of sensor data, a robot must rely on machine learning. Conventionally, processing a sensor’s raw data was tedious and difficult, Calandra says. To understand the raw data and convert them into physically meaningful numbers such as force, roboticists had to calibrate and characterize the sensor. With machine learning, roboticists can skip these laborious steps. The algorithms enable a computer to sift through a huge amount of raw data and identify meaningful patterns by itself. These patterns — which can represent a sufficiently tight grip or a rough texture — can be learnt from training data or from computer simulations of its intended task, and then applied in real-life scenarios.

“We’ve really just begun to explore artificial intelligence for touch sensing,” Calandra says. “We are nowhere near the maturity of other fields like computer vision or natural language processing.” Computer-vision data are based on a two-dimensional array of pixels, an approach that computer scientists have exploited to develop better algorithms, he says. But researchers still don’t fully know what a comparable structure might be for tactile data. Understanding the structure for those data, and learning how to take advantage of them to create better algorithms, will be one of the biggest challenges of the next decade.

Barrier removal

The boom in machine learning and the variety of emerging hardware bode well for the future of tactile sensing. But the plethora of technologies is also a challenge, researchers say. Because so many labs have their own prototype hardware, software and even data formats, scientists have a difficult time comparing devices and building on one another’s work. And if roboticists want to incorporate touch sensing into their work for the first time, they have to build their own sensors from scratch, an often expensive task and not necessarily in their area of expertise.

This is why, last November, GelSight and Meta AI announced a partnership to manufacture a camera-based fingertip-like sensor called DIGIT. With a listed price of $300, the device is designed to be a standard, relatively cheap, off-the-shelf sensor that can be used in any robot. “It definitely helps the robotics community, because the community has been hindered by the high cost of hardware,” Santos says.

Depending on the task, however, you don’t always need such advanced hardware. In a paper published in 2019, a group at MIT led by Subramanian Sundaram built sensors by sandwiching together a few layers of material that change electrical resistance under pressure (ref. 11). These sensors were then incorporated into gloves, at a total material cost of just $10. When aided by machine learning, even a tool as simple as this can help roboticists to better understand the nuances of grip, Sundaram says.

Not every roboticist is a machine-learning specialist, either. To help with this, Meta AI has released open-source software for researchers to use. “My hope is by open-sourcing this ecosystem, we’re lowering the entry bar for new researchers who want to approach the problem,” Calandra says. “This is really the beginning.”

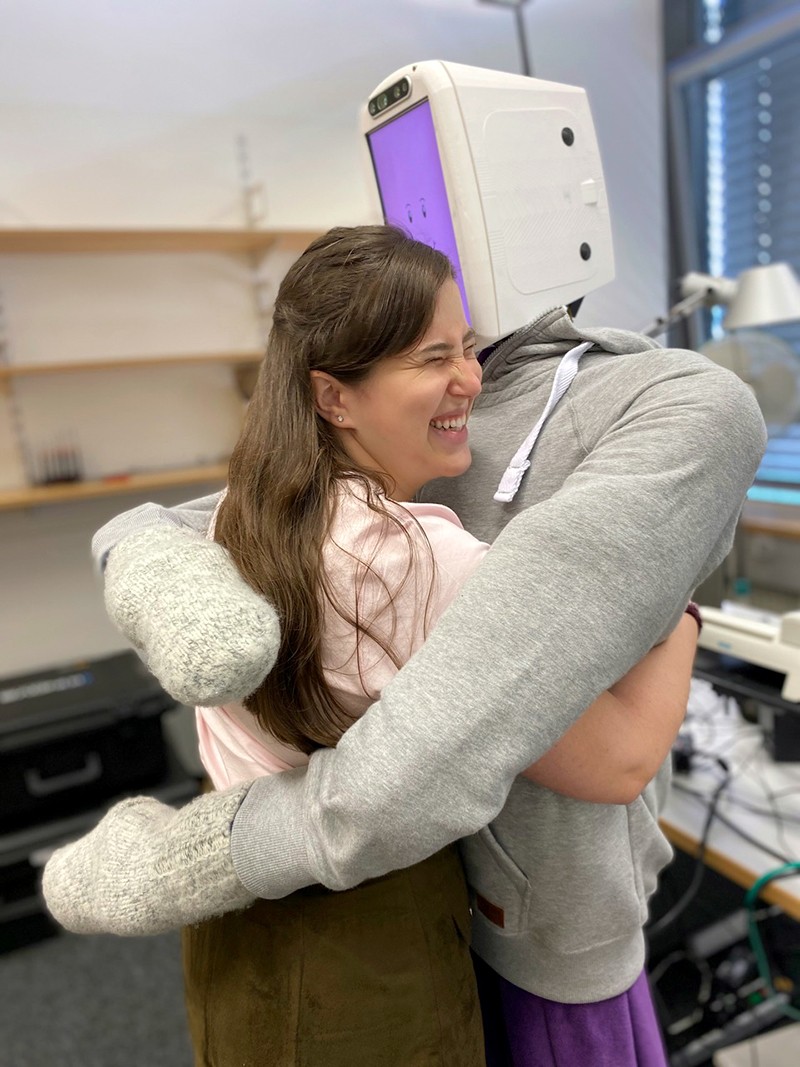

Although grip and dexterity continue to be a focus of robotics, that’s not all tactile sensing is useful for. A soft, slithering robot might need to feel its way around to navigate rubble as part of search-and-rescue operations, for instance. Or a robot might simply need to feel a pat on the back: Kuchenbecker and her student Alexis Block have built a robot with torque sensors in its arms and a pressure sensor and microphone inside a soft, inflatable body that can give a comfortable and pleasant hug, and then release when you let go. That kind of human-like touch is essential to many robots that will interact with people, including prosthetics, domestic helpers and remote avatars. These are the areas in which tactile sensing might be most important, Santos says. “It’s really going to be the human–robot interaction that’s going to drive it.”

So far, robotic touch is confined mainly to research labs. “There’s a need for it, but the market isn’t quite there,” Santos says. But some of those who have been given a taste of what might be achievable are already impressed. Schrenk’s tests of ADA, the feeding robot, provided a tantalizing glimpse of independence. “It was just really cool,” he says. “It was a look into the future for what might be possible for me.”

doi: https://doi.org/10.1038/d41586-022-01401-y

This article is part of Nature Outlook: Robotics and artificial intelligence, an editorially independent supplement produced with the financial support of third parties.

References

1. Song, H., Bhattacharjee, T. & Srinivasa, S. S. 2019 International Conference on Robotics and Automation 8367–8373 (IEEE, 2019).

2. Yuan, W., Dong, S. & Adelson, E. H. Sensors 17, 2762 (2017).

3. Sun, H., Kuchenbecker, K. J. & Martius, G. Nature Mach. Intell. 4, 135–145 (2022).

4. Boutry, C. M. et al. Sci. Robot. 3, aau6914 (2018).

5. Guo, H. et al. Nature Commun. 11, 5747 (2020).

6. Bhirangi, R., Hellebrekers, T., Majidi, C. & Gupta, A. Preprint at http://arxiv.org/abs/2111.00071 (2021).

7. Wade, J., Bhattacharjee, T., Williams, R. D. & Kemp, C. C. Robot. Auton. Syst. 96, 1–14 (2017).

8. Huh, T. M. et al. 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems 1786–1793 (IEEE, 2021).

9. Nadeau, P., Abbott, M., Melville, D. & Stuart, H. S. 2020 IEEE International Conference on Robotics and Automation 3701–3707 (IEEE, 2020).

10. Kim, Y. et al. Science 360, 998–1003 (2018).

11. Sundaram, S. et al. Nature 569, 698–702 (2019).